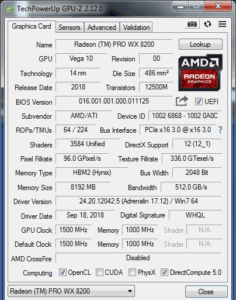

AMD has recently released a new high-end professional GPU as part of its RadeonPro line. The RadeonPro WX8200 is based on the Vega architecture, with 3584 compute cores accessing 8GB of HBM2 memory at up to 512GB/sec. Its hardware specs are roughly equivalent to those of AMD’s $400 Vega 56 gaming card, but it ships with professional drivers tuned for performance in a variety of high-end 3D applications. Priced at $999, it is marketed by AMD as an option between NVidia’s Quadro P4000 and P5000.
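The 512GB/sec figure follows from the usual peak-bandwidth arithmetic: bus width times per-pin data rate, divided by 8 bits per byte. The sketch below assumes a 2048-bit HBM2 interface on both Vega cards and per-pin rates of 2.0 Gbit/s for the WX8200 and 1.6 Gbit/s for the Vega 56. Those inputs are my assumptions rather than figures from this review, so treat the output as a rough comparison of theoretical peaks only.

```python
# Peak memory bandwidth = bus width (bits) x per-pin data rate (Gbit/s) / 8 bits per byte.
# ASSUMPTIONS: 2048-bit HBM2 interface on both cards; 2.0 Gbit/s per pin on the WX8200,
# 1.6 Gbit/s per pin on the Vega 56. These are theoretical peaks, not measured numbers.


def peak_bandwidth_gb_s(bus_width_bits: int, gbit_per_pin: float) -> float:
    """Return theoretical peak bandwidth in GB/s."""
    return bus_width_bits * gbit_per_pin / 8


wx8200 = peak_bandwidth_gb_s(2048, 2.0)  # about 512 GB/s
vega56 = peak_bandwidth_gb_s(2048, 1.6)  # about 409.6 GB/s

print(f"WX8200: {wx8200:.1f} GB/s, Vega 56: {vega56:.1f} GB/s")
print(f"WX8200 advantage on paper: {wx8200 / vega56 - 1:.0%}")
```

Under those assumptions the WX8200’s memory is about 25% faster on paper than the Vega 56’s, which is one place where the two otherwise similar cards differ.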

AMD GPUs

First off, I haven’t really used an AMD GPU since AMD bought ATI over 10 years ago. My first Vaio laptop had an ATI Radeon 7500 in it back in 2002, and we used ATI Radeon cards in our Matrox AXIO LE systems at Bandito Brothers in 2006, right around the time ATI was acquired by AMD. My last AMD-based CPUs were Opterons in HP XW9300 workstations from the same period. But we were already heading towards NVidia GPUs when Adobe released Premiere Pro CS5 in early 2010. CS5’s CUDA-based, GPU-accelerated Mercury Playback Engine locked us into NVidia GPUs for years to come. Adobe eventually added OpenCL support as an alternative acceleration path for Mercury, primarily because of the Mac hardware options available in 2014, but it was never as mature or reliable on Windows as the CUDA-based option. By that point we were already heavily invested in NVidia hardware, and used to working with it, so we stayed on that trajectory.

I have a good relationship with many people at NVidia, and have reviewed many of their cards over the years. Back in 2008, their Quadro CX was the first piece of hardware I was ever given for the explicit purpose of reviewing, instead of just writing about products I was already using at work. So I had to pause and consider whether I could really do an honest and unbiased review of an AMD card. But what if AMD cards worked just as well as the NVidia cards I was used to? That would really open up my options when selecting a new laptop, as most of the lighter-weight models have had AMD GPUs for the last few years (if they have a discrete GPU at all, and integrated graphics is not an option for my use case). It would also be useful information and experience to have, as I was about to outfit a new edit facility with systems, and more options are always good when you are looking for ways to cut costs without sacrificing performance or stability.

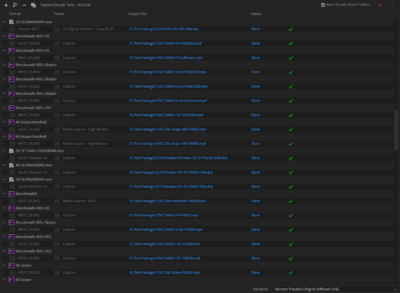

So I agreed to review this new card and run it through the same tests I use for my Quadro reviews. Ideally, I would have a standard set of assets and timelines to use every time I evaluate new hardware, and could just compare the results to my records from previous tests. But the software the tests run in keeps changing as well: Premiere Pro was on version 11 when I tested the Pascal Quadros, while version 13 is out now. I was also testing 6K files then, and have lots of 8K assets now, as well as a Dell UP3218K monitor to view them on. Just driving images smoothly to an 8K monitor is a decent test for a graphics card as well. So I ended up benchmarking not just the new AMD card, but all of the other (NVidia) cards I have on hand for comparison, which made for quite a project.

The Hardware

The first step was to install the card in my Dell Precision 7910 workstation. It dropped right into the slot usually occupied by my Quadro P6000. It takes up two slots, with a single PCIe 3.0 x16 connector, and requires both a 6-pin and an 8-pin PCIe power connector, which I was able to provide with a bit of reconfiguration. Externally it has four MiniDisplayPort connectors, and nothing else. Dell has an ingenious system of shipping DP-to-mDP cables with monitors that have both ports, allowing either source port to be used by reversing the cable.

But that didn’t apply to my UP3218K, which uses dual full-sized DisplayPort inputs, and only after ordering MiniDP-to-DisplayPort cables did I realize I already had some from my PrevailPro review for the same reason. I prefer full-sized connectors, to ensure I don’t try to plug them in backwards, especially since AMD didn’t use the space savings to include any other ports (HDMI, USB-C, etc.) on the card. I also tried the card in an HP Z4 workstation a few days later, to see if the Windows 10 drivers were any different, so those notes are included throughout.
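Why does the UP3218K need two full-sized DisplayPort cables at all? Here is a back-of-the-envelope sketch. It assumes DisplayPort 1.4 at HBR3 (4 lanes at 8.1 Gbit/s with 8b/10b encoding), 8 bits per color channel, no Display Stream Compression, and it ignores blanking intervals, so the real requirement is somewhat higher than what it computes.

```python
# Rough check of how many DisplayPort 1.4 cables an 8K 60Hz signal needs.
# ASSUMPTIONS: HBR3 link (4 lanes x 8.1 Gbit/s) with 8b/10b coding, 24 bits per pixel,
# no DSC, and blanking ignored (so the computed rate is a lower bound).

DP14_HBR3_PAYLOAD_GBIT = 4 * 8.1 * 8 / 10  # about 25.92 Gbit/s usable per cable


def video_gbit_s(width: int, height: int, refresh_hz: int, bits_per_pixel: int) -> float:
    """Active-pixel data rate in Gbit/s (ignores blanking)."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


need = video_gbit_s(7680, 4320, 60, 24)

# Split the signal across cables until each one fits within a single link's payload.
cables = 1
while need / cables > DP14_HBR3_PAYLOAD_GBIT:
    cables += 1

print(f"8K60 at 24 bpp needs about {need:.2f} Gbit/s, so at least {cables} DP 1.4 cables")
```

Even as a lower bound, roughly 47.8 Gbit/s is well beyond one HBR3 link, which is consistent with the monitor splitting the image across two cables.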

The Drivers

Once I had my monitors hooked up, I booted the system to see what happened. I was able to install the drivers and reboot for full functionality without issue. The driver install is a two-part process: first you install AMD’s display software, and then that software installs a driver. I like this approach, as it allows you to change driver versions without reinstalling all of the other supporting software. The fact that driver packages these days are over 500MB is a bit ridiculous, especially for those of us not fortunate enough to live in areas with fiber internet, so hopefully this approach alleviates that issue a bit. AMD advertises that this functionality also allows you to switch driver versions without rebooting, and its RadeonPro line fully supports AMD’s gaming drivers as well. This can be an advantage for game developers who use the professional feature set for their work but want to test the consumer experience without a separate dedicated system, or for anyone who wants better gaming performance on a dual-use system.

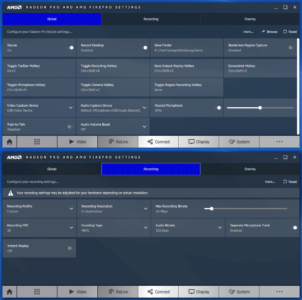

The other feature I liked in their software package is a screen capture tool called RadeonPro ReLive. It records the whole screen or selected windows, along with application audio and, optionally, microphone audio. It saves the recordings as AVC or HEVC files encoded by the card’s VCE 4.0 hardware video compression engine. When I tested it, it worked as expected and the captured files looked good, with my microphone voice saved as a separate audio file while the system audio was embedded in the video recording. This is a great tool for making software tutorials and similar tasks, and I intend to use it soon to post videos of my project workflows. NVidia offers similar functionality in the form of ShadowPlay, but doesn’t market it to professionals, as it is part of the GeForce Experience software. I tested it for comparison, and it does work on Quadro cards, but it has fewer options and controls. NVidia should take a cue from AMD here and develop a more professional solution for users who need this functionality.

I used the card with both my main curved 34″ monitor at 3440×1440 and my 8K monitor at 7680×4320. The main display worked perfectly the entire time, but the 8K one gave me a lot of issues. I went through lots of tests on both OSes, with various cables and drivers, before discovering that a firmware update for the monitor solved them. So if you have a UP3218K, take the time to update its firmware for maximum GPU compatibility. My HDMI-based home theater system, on the other hand, worked perfectly, and even gave me access to the 5.1 speakers in Premiere through AMD’s HDMI audio drivers.

10 Bit Display Support

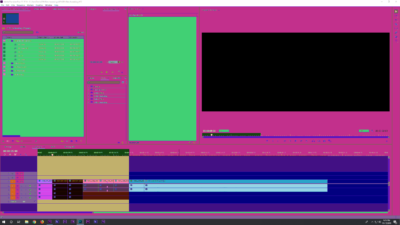

One of the main reasons to get a “professional” GPU over a much cheaper gaming card is 10-bit color support in professional applications; consumer GPUs only output 10-bit in full-screen contexts like games and video playback. But when I enabled 10-bit mode in the RadeonPro Advanced panel, I ran into some serious issues. On Windows 7, it disabled the viewports in most of my professional apps, like Premiere, After Effects, and Character Animator. When I enabled it on a Windows 10 system, to see if a newer OS worked any better, the Adobe application interfaces looked even crazier (pictured), and there was still no video playback. I was curious whether my Print Screen captures would still look this way once I disabled the 10-bit setting, since the distorted look I saw after pasting them into a Photoshop document could in theory have been a display artifact on top of a correct capture. But no, the screen captures look exactly like the interface did on my display. AMD is aware of this issue and expects it to be fixed in the next driver release.
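To make the 8-bit versus 10-bit difference concrete: each extra bit doubles the number of code values per color channel, so a smooth gradient gets four times as many steps at 10 bits, and each visible band is correspondingly narrower. This minimal sketch quantizes a hypothetical synthetic ramp at both depths and counts the distinct levels. It illustrates the math only and says nothing about how any particular driver handles 10-bit output.

```python
# Count how many distinct code values a smooth 0.0-1.0 ramp collapses to at each bit depth.
# Fewer distinct levels across the same range means wider, more visible banding steps.


def quantize(value: float, bits: int) -> int:
    """Map a normalized 0.0-1.0 value to an integer code at the given bit depth."""
    max_code = (1 << bits) - 1
    return round(value * max_code)


# Hypothetical smooth gradient: 4096 evenly spaced samples from black to full intensity.
ramp = [i / 4095 for i in range(4096)]

for bits in (8, 10):
    levels = len({quantize(v, bits) for v in ramp})
    print(f"{bits}-bit: {levels} distinct levels per channel")
```

This prints 256 levels for 8-bit and 1024 for 10-bit, which is why grading a subtle sky gradient on an 8-bit pipeline can show banding that is not actually in a 10-bit source.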

Render Performance

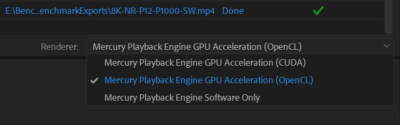

At this point it came down to analyzing the card’s performance and seeing what it could do. GPUs are designed to accelerate the calculations required to display 3D graphics, but that processing capacity can be used in other ways. I don’t do much true 3D processing besides the occasional FPS game, so my GPU utilization is all image processing for video editing and visual effects. AMD’s GPUs can accelerate this through the OpenCL (Open Computing Language) framework, as well as through Metal on the Mac side. My main application, Premiere Pro 12, explicitly supports OpenCL acceleration, as do After Effects and Media Encoder. So I opened them up and started working. I didn’t see a huge difference in interface performance, even when pushing high-quality files around, but that is a fairly subjective test, and the results are inconsistent: I might drop frames during playback one time but not the next.

Render time is a much more easily quantifiable measure of computational performance, so I created a set of sequences to render in each hardware configuration for repeatable tests. I am pretty familiar with which operations are CPU-based and which run on the GPU, so I made a point of creating test projects that work the GPUs as hard as possible, based on clip resolution, codec, and selection of accelerated effects, to highlight the performance differences in that area. I rendered those sequences with OpenCL acceleration enabled on the WX8200, with all GPU acceleration disabled in Mercury software playback mode, and then with a number of different NVidia GPUs for comparison. To push the cards as hard as possible, I used 6K Venice files and 8K Red files, with Lumetri grades and other GPU effects applied, and exported them to 10-bit Rec.2020 H.265 files in UHD and 8K. (I literally named the 8K sequence “Torture Test.”)
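For anyone building a similar repeatable render test, here is a minimal Python sketch of the timing side: run the same job several times and report the median along with the spread, since individual runs can vary. `job` is a hypothetical stand-in for whatever starts an export and waits for it to finish (no particular Media Encoder command-line options are assumed), and `clock` is injectable so the helper can be checked without rendering anything.

```python
import statistics
import time
from typing import Callable, List


def time_runs(job: Callable[[], None], runs: int = 3,
              clock: Callable[[], float] = time.perf_counter) -> List[float]:
    """Run `job` `runs` times and return each duration in seconds."""
    durations = []
    for _ in range(runs):
        start = clock()
        job()  # hypothetical: start one render/export and block until it completes
        durations.append(clock() - start)
    return durations


def fmt_mmss(seconds: float) -> str:
    """Format seconds as m:ss, matching how render times are usually written down."""
    minutes, secs = divmod(round(seconds), 60)
    return f"{minutes}:{secs:02d}"


def summarize(label: str, durations: List[float]) -> str:
    """Median plus spread, so one unusually fast or slow run doesn't skew the comparison."""
    median = statistics.median(durations)
    spread = max(durations) - min(durations)
    return f"{label}: median {fmt_mmss(median)} (spread {fmt_mmss(spread)}, {len(durations)} runs)"


if __name__ == "__main__":
    # Placeholder job that just sleeps briefly instead of rendering.
    print(summarize("placeholder", time_runs(lambda: time.sleep(0.01), runs=3)))
```

Reporting the spread alongside the median is a cheap way to tell a real difference between cards from run-to-run noise.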

My initial tests favored NVidia GPUs by a factor of at least 3 to 1, which was startling, and repeated runs gave the same result. Further tests and research, verified by a variety of other sources, showed that AMD (and OpenCL) is usually about 25% slower than Adobe’s CUDA mode on similarly priced hardware. My results were worse for two reasons: RED decoding is currently better optimized for acceleration on NVidia cards, and rendering at 10-bit ground AMD-accelerated OpenCL renders to a halt. When exporting 10-bit HDR files at “Maximum Bit Depth,” renders took up to 8 times as long to finish. Clearly this was a bug, but it took a lot of experimentation to narrow it down, and Intel-based OpenCL acceleration doesn’t suffer from the same issue. Once I was able to test the newest Media Encoder 13 release on the Windows 10 system, the 10-bit performance hit when using AMD disappeared. When I removed the Red source footage and exported 8-bit HEVC files, the WX8200 was just as fast as any of my NVidia cards (P4000 and P6000). When sourcing from Red footage, the AMD card took twice as long, but GPU-based effects seemed to have no effect on render time, so those are properly accelerated by the card.

| Render test (min:sec, 8-bit / 10-bit HDR) | RadeonPro WX8200 | Quadro P4000 | GPU disabled | Quadro P1000 (laptop) | RadeonPro WX8200 (AME 13) |
|---|---|---|---|---|---|
| Red-4K to HEVC | 3:06 / 26:21 | 2:29 / 4:56 | 8:32 / 62:00 | 10:04 / 30:30 | 3:03 / 6:47 |
| Red-4K FX to HEVC | 3:54 / 41:57 | 2:52 / 5:01 | 18:20 / 70:10 | 11:25 / 49:04 | 2:47 / 6:11 |
| X-OCN to 4K HEVC | 0:53 / 2:23 | 0:55 / 3:09 | 4:52 / 30:48 | 4:02 / 4:59 | 1:15 / 3:05 |
| X-OCN to 8K HEVC | 4:31 | 4:29 | 30:12 | 4:20 | 4:58 |
| DNxHR to DCP | 2:38 | 2:21 | 2:02 | 2:46 | 2:35 |
| 6K Red with Rocket | 0:56 / 7:00 | 1:33 / 1:48 | Not tested | Not tested | Not tested |
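Reading the render table as ratios makes the patterns easier to see. This sketch assumes the times are minutes:seconds and uses the WX8200 and P4000 columns (the ones not labeled AME 13) for the three tests that have both 8-bit and 10-bit results. It only does arithmetic on the numbers in the table.

```python
def to_seconds(mmss: str) -> int:
    """Convert an m:ss string from the render table to seconds (assumes minutes:seconds)."""
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)


# (test, WX8200 8-bit, WX8200 10-bit, P4000 8-bit, P4000 10-bit), non-AME 13 columns
rows = [
    ("Red-4K to HEVC", "3:06", "26:21", "2:29", "4:56"),
    ("Red-4K FX to HEVC", "3:54", "41:57", "2:52", "5:01"),
    ("X-OCN to 4K HEVC", "0:53", "2:23", "0:55", "3:09"),
]

for name, wx_8, wx_10, p4_8, p4_10 in rows:
    wx_hdr_penalty = to_seconds(wx_10) / to_seconds(wx_8)  # how much slower 10-bit is on the WX8200
    p4_hdr_penalty = to_seconds(p4_10) / to_seconds(p4_8)  # same ratio on the P4000
    wx_vs_p4_8bit = to_seconds(wx_8) / to_seconds(p4_8)    # >1 means the WX8200 took longer
    print(f"{name}: 10-bit/8-bit WX8200 {wx_hdr_penalty:.1f}x, P4000 {p4_hdr_penalty:.1f}x; "
          f"8-bit WX8200/P4000 {wx_vs_p4_8bit:.2f}x")
```

On these inputs the WX8200’s 10-bit/8-bit ratios come out to about 8.5x, 10.8x, and 2.7x, while its 8-bit times relative to the P4000 are about 1.25x, 1.36x, and 0.96x.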

So as long as you aren’t using Red source footage, and you use Premiere Pro and Media Encoder 13 or newer, this card is comparable to the alternatives for most Adobe Media Encoder (AME) renders. Very detailed benchmarks are available here.

Laptop eGPU Test

I also wanted to try the WX8200 in my new Thunderbolt-attached eGPU enclosure, the Sonnet Breakaway Box, but I was a bit concerned about potential driver conflicts, since my test laptop, the ZBook x360, has a built-in NVidia Quadro P1000. Because I had heard of issues with mixing drivers in the past, I did some research online before giving it a shot. I didn’t see any major reports of problems, even from people who claimed to have both AMD and NVidia GPUs in their systems for various reasons. And my Dell workstation had performed seamlessly with both sets of drivers installed, so I didn’t have to reinstall everything each time I tested a different card. The WX8200 doesn’t officially support eGPU configurations, but I decided to try it anyway: I installed the card in the Breakaway Box and hooked it up. The laptop recognized the hardware, and I started to install the drivers. This resulted in a crash, and the system refused to boot or repair itself until I found a way to manually remove the AMD drivers from the pre-boot command line. After that it booted up just fine, and I wiped the rest of the AMD software and decided to stick with officially supported configurations from then on. Lesson of the day: don’t mix AMD and NVidia GPUs and drivers.

VR and True 3D Workloads

I got a chance to try the WX8200 in another Windows 10 system, which allowed me to test its VR capabilities with my Lenovo Explorer WMR headset. That is an area where it should shine, because in most cases VR is a true 3D processing workload. Testing VR was one of the main things I had hoped to accomplish with the eGPU installation on my Windows 10 laptop. I was able to get it to work with Windows Mixed Reality, SteamVR, and Adobe Immersive Environment, and editing 360 videos looked great in Premiere. One issue I encountered was that both Premiere 12 and 13 crashed every time I closed them after editing 360 videos, which stopped happening when I swapped out the card, but that didn’t affect my work in the apps.

The only true 3D performance test I have access to (besides 3D games) is Maxon’s Cinebench. Only its OpenGL benchmark really relies on the GPU, so it is not as useful here as when I test complete notebooks and workstations, but it still gives a comparison of potential performance.

| GPU | Maxon Cinebench OpenGL score |
|---|---|
| RadeonPro WX8200 | 156 |
| Quadro P4000 | 176 |
| GeForce RTX 2080 Ti | 134 |
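Normalizing the Cinebench results to the Quadro P4000 puts them on a single percentage scale. This is only arithmetic on the three scores in the table.

```python
# OpenGL scores from the Cinebench table, normalized to the Quadro P4000 as the baseline.
scores = {
    "RadeonPro WX8200": 156,
    "Quadro P4000": 176,
    "GeForce RTX 2080 Ti": 134,
}
baseline = scores["Quadro P4000"]

for gpu, score in scores.items():
    print(f"{gpu}: {score / baseline:.0%} of the P4000")
```

That works out to about 89% of the P4000’s score for the WX8200 and 76% for the RTX 2080 Ti.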

So do you need a RadeonPro WX8200?

That depends on what type of work you do. I am a Windows-based Adobe editor, and Adobe has spent a lot of time optimizing its CUDA-accelerated Mercury Playback Engine for Premiere Pro on Windows, which is reflected in how well the NVidia cards perform in my renders, especially with version 12, the final release for Windows 7. Avid or Resolve may give different results, and even Premiere Pro on OS X may perform much better with AMD GPUs thanks to the Metal framework optimizations in that version of the program. It isn’t that the card is necessarily “slower”; it just isn’t being utilized as well by my software. NVidia has also invested a lot of effort into making CUDA a framework for tasks beyond 3D calculations, while AMD has focused its efforts directly on 3D rendering, with things like ProRender, which GPU-accelerates true 3D renders. If you do traditional 3D work, either animation or CAD projects, this card will probably be much more suitable for you than it is for me. So while I won’t be switching over to this card any time soon, that doesn’t mean it isn’t the optimal card for users with different needs.
