NVidia had their big GPU Technology Conference this week in San Jose. As the third largest company in the world (by market capitalization value) NVidia’s profile has risen dramatically over the last couple years, primarily due to their position in the datacenter and AI processing world. As such, their flagship conference becomes less “graphics” related every year, as their products push more into those other spaces. This year had very few media and entertainment focused sessions, and even those where usually very AI centric. Generative AI is where graphics and AI meet, and some amazing work is being done in that area, but I would still classify much of that as “art” as opposed to “storytelling” as the tools have not matured that far yet, to give the user enough control over the process to tightly steer the end result. My friend just won an AI film festival, but optimizing for the tools available was still steering their workflow more than their story was.

Now events like GTC are how those tools get improved, but this conference is more for the people developing those tools, not the people using them. So it is good to know what is coming, but there is still a lag time between when new technologies are announced, and when they are ready to be used in the marketplace. And this is the first GTC occurring in person in San Jose since the pandemic moved everything online in 2020. Jensen Huang gave the keynote presentation at SAP Center in San Jose, which much larger than the previous venues within the convention center, which I attended in the past.

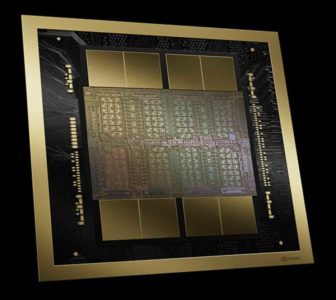

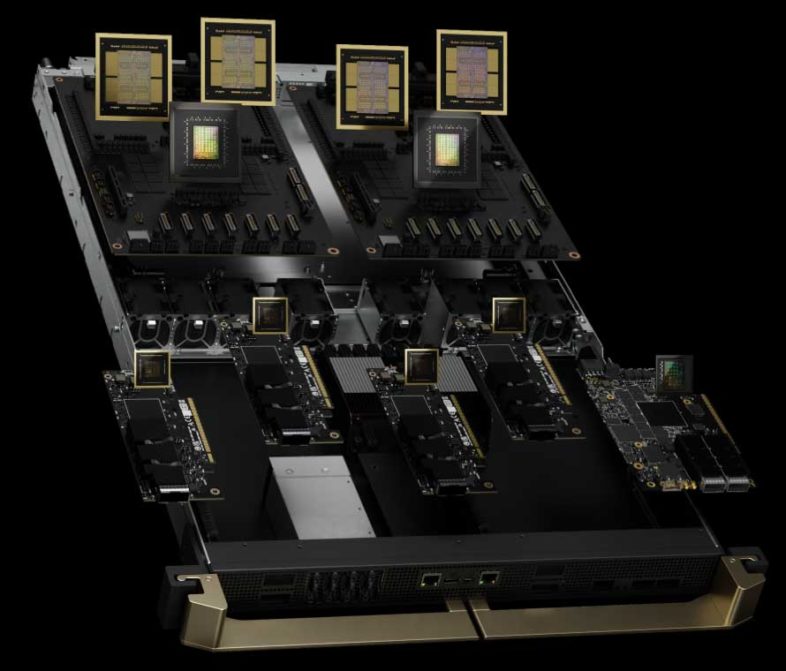

The main focus of his presentation was the new Blackwell architecture for supercomputers. No mention was given to putting that technology into a PCIe card, although it is my understanding that that is what will be coming next. The presentation hardly even referenced the SMX socket version for existing GPU computers, and primarily focused on the GB200 Grace Blackwell NVL platform, which combines two Blackwell dual die GPUs with a single existing Grace CPU with 72 ARM Cores. The entire board has nearly 1TB of memory, and 16TB of memory bandwidth. The new DGX cluster, GB200 NVL72, has 72 Blackwell GPUs, across 18 NVLink connected nodes, with two boards in each node, as well as four 800Gb NICs, in a watercooler MGX enclosure, with a dedicated Switch. That Rack offers 720PetaFLOPs of FP8 computing power, at 120KiloWatts of power usage. That is 10PetaFLOPs per GPU, which is 2.5x more than the previous generation Hopper chips, and far more than Ada. (They pointed out that the first DGX had .017TeraFLOPs six years ago, so this is a 4000x improvement for AI processing via Tensor Cores.)

The main focus of his presentation was the new Blackwell architecture for supercomputers. No mention was given to putting that technology into a PCIe card, although it is my understanding that that is what will be coming next. The presentation hardly even referenced the SMX socket version for existing GPU computers, and primarily focused on the GB200 Grace Blackwell NVL platform, which combines two Blackwell dual die GPUs with a single existing Grace CPU with 72 ARM Cores. The entire board has nearly 1TB of memory, and 16TB of memory bandwidth. The new DGX cluster, GB200 NVL72, has 72 Blackwell GPUs, across 18 NVLink connected nodes, with two boards in each node, as well as four 800Gb NICs, in a watercooler MGX enclosure, with a dedicated Switch. That Rack offers 720PetaFLOPs of FP8 computing power, at 120KiloWatts of power usage. That is 10PetaFLOPs per GPU, which is 2.5x more than the previous generation Hopper chips, and far more than Ada. (They pointed out that the first DGX had .017TeraFLOPs six years ago, so this is a 4000x improvement for AI processing via Tensor Cores.)

It is difficult to accurately compute the differences between this and existing GPUs, because they are no longer benchmarking with the same measurements, as AI uses FP8 and now FP4, which exaggerates the performance numbers compared to traditional FP32 bit computations in graphics. Best I can tell Ada PCIe GPUs peak at 600TeraFLOPs of FP32 Tensor processing, while if the patterns follow the usual formula, this new Blackwell chip should be able to do 2500 TeraFLOPs at FP32 Precision, so a 4x improvement. This is further complicated by the transition from TeraFLOPs (Trillion) to PetaFLOPs (Quadrillion) but suffice to say that the new chips are really fast as technology has advanced. I would predict that a new PCIe card based on this architecture should have 2-3 times the overall performance of current Ada based GPUs. But based on the previous Hopper and Ada Lovelace architecture branching, this may not become the basis for a new GPU at all, and we might be waiting for a separate development there.

It is difficult to accurately compute the differences between this and existing GPUs, because they are no longer benchmarking with the same measurements, as AI uses FP8 and now FP4, which exaggerates the performance numbers compared to traditional FP32 bit computations in graphics. Best I can tell Ada PCIe GPUs peak at 600TeraFLOPs of FP32 Tensor processing, while if the patterns follow the usual formula, this new Blackwell chip should be able to do 2500 TeraFLOPs at FP32 Precision, so a 4x improvement. This is further complicated by the transition from TeraFLOPs (Trillion) to PetaFLOPs (Quadrillion) but suffice to say that the new chips are really fast as technology has advanced. I would predict that a new PCIe card based on this architecture should have 2-3 times the overall performance of current Ada based GPUs. But based on the previous Hopper and Ada Lovelace architecture branching, this may not become the basis for a new GPU at all, and we might be waiting for a separate development there.

On the software side, the biggest news was the idea of NVidia Inference Microservices or NIMs, which would be prepackaged and trained AI software tools that could be easily deployed and combined to better utilize all of this new computing hardware. They also have tools for Streaming Omniverse Cloud data directly to Apple’s new Vision Pro Headsets, which should add value to both products.

On the software side, the biggest news was the idea of NVidia Inference Microservices or NIMs, which would be prepackaged and trained AI software tools that could be easily deployed and combined to better utilize all of this new computing hardware. They also have tools for Streaming Omniverse Cloud data directly to Apple’s new Vision Pro Headsets, which should add value to both products.

I also watched a session where a panel from the Vegas Sphere team discussed the workflow that they use to create content for that venue. The a moving massive amounts of data around, to drive a 16K x 16K display with uncompressed 12bit imagery, some of which is generated in real-time. They use NVidia both for the 100GbE networking to transport their 2110 streams, and for the graphics processing o

But NVidia has also released some other new products over the last few months that may be more immediately relevant to users in the M&E space. The RTX 2000 Ada is a lower end Ada generation PCIe based GPU that fits in a half height half length dual slot space, with a PCIe x8 4.0 connector, for 1U and 2U rack mount systems or other small formfactor cases. With 16GB RAM and 12TeraFLOPs performance, it is 50% faster than the previous Ampere RTX2000, and on par with the original Turing based RTX5000. It is probably enough GPU power for most editors, but not for 3D artists. There are also new lower end Ada based mobile GPUs in the RTX 500 and RTX 1000 for laptops. Low end GPUs didn’t used to get my attention, but as processors have gotten more powerful, a lower end GPU is enough for many users, and the options are worth exploring. With 2K CUDA cores, and 9TeraFLOPs of performance, even the lowest RTX 500 has respectable performance. People still editing in HD or even UHD, who aren’t using HDR or floating point processing, may be well served by a basic RTX GPU that still has hardware acceleration for codecs, and dedicated video memory, without thousands of CUDA cores. It offers a cheaper solution that meets their needs while offering lighter weight systems with longer battery life.

But NVidia has also released some other new products over the last few months that may be more immediately relevant to users in the M&E space. The RTX 2000 Ada is a lower end Ada generation PCIe based GPU that fits in a half height half length dual slot space, with a PCIe x8 4.0 connector, for 1U and 2U rack mount systems or other small formfactor cases. With 16GB RAM and 12TeraFLOPs performance, it is 50% faster than the previous Ampere RTX2000, and on par with the original Turing based RTX5000. It is probably enough GPU power for most editors, but not for 3D artists. There are also new lower end Ada based mobile GPUs in the RTX 500 and RTX 1000 for laptops. Low end GPUs didn’t used to get my attention, but as processors have gotten more powerful, a lower end GPU is enough for many users, and the options are worth exploring. With 2K CUDA cores, and 9TeraFLOPs of performance, even the lowest RTX 500 has respectable performance. People still editing in HD or even UHD, who aren’t using HDR or floating point processing, may be well served by a basic RTX GPU that still has hardware acceleration for codecs, and dedicated video memory, without thousands of CUDA cores. It offers a cheaper solution that meets their needs while offering lighter weight systems with longer battery life.

On the software release front, NVidia has revamped their GeForce Experience (as well as the more professionally focused Quadro/RTX Experience) control center into an entirely new unified NVidia App. It is a totally new UI, and significantly, no longer requires an online account and login to use basic features of the card. Having tried it, I think it is a welcome improvement over the previous solution.

On the software release front, NVidia has revamped their GeForce Experience (as well as the more professionally focused Quadro/RTX Experience) control center into an entirely new unified NVidia App. It is a totally new UI, and significantly, no longer requires an online account and login to use basic features of the card. Having tried it, I think it is a welcome improvement over the previous solution.

NVidia also have recently released Chat for RTX, which is a large language module GPT type chat program that runs entirely on local RTX GPUs. You need to have a sufficient source of data to train it on, but this may be a way for people interested in AI to begin to experiment with creating their own models, instead of just using cloud based options that other people have trained. There is a lot to this AI transition, and NVidia and their products are at the center of the process.

NVidia also have recently released Chat for RTX, which is a large language module GPT type chat program that runs entirely on local RTX GPUs. You need to have a sufficient source of data to train it on, but this may be a way for people interested in AI to begin to experiment with creating their own models, instead of just using cloud based options that other people have trained. There is a lot to this AI transition, and NVidia and their products are at the center of the process.