My last post was about Adobe’s announcements and new releases at Adobe MAX. This one will focus on the other things I did at the conference, and what it was like to attend in person. The first event was the Opening Keynote, which focused on the announcements already covered, and the rest of that day was spent in various sessions. The second day’s Inspiration Keynote was less technical in nature, and it was followed by another day of sessions and then Adobe Sneaks, where the company reveals new technology still in development. Between all of these events, there was the Creativity Park, a full hall of booths from different groups within Adobe, as well as partner companies showcasing both hardware and software solutions that extend the functionality of Adobe’s offerings. And then of course there was a big party to cap it all off.

Inspiration Keynote

I usually don’t have much to report from the day 2 keynote, as it is rarely technical and mostly about soft skills that are hard to describe. But this year’s presentation stood out to me in a number of ways. There were four presenters with very different styles and messages, none of whom I had ever heard of, because that is not the space I occupy. First up was Aaron James Draplin, a graphic designer in Portland with a very distinctive style, who appears to pride himself on not fitting the mold of corporate success. His big, loud, autobiographical presentation was entertaining, but didn’t have much of a takeaway beyond this: work hard, and you have a chance at your own unique success.

Second was Karen X Cheng, who is a social media artist, with some pretty innovative art, the technical aspects of which I am better able to appreciate. Her explicit mix of AI and real photography was powerful. She talked a lot about the algorithms that rule the social media space, and how they skew our perception of value. I thought her five defenses against the algorithm were important ideas:

Money and Passion don’t always align – Pursue both, separately if necessary

Be proud of your flops – Likes and reshares aren’t the only measure of value

Seek respect, not attention; it lasts longer – this one is self-explanatory

Human+AI > AI – AI is a powerful tool, but even more so in the hands of a skilled user

Take a sabbath from screens – It helps keep in perspective that there is more to life

Up next was Walker Noble, an artist who found financial success selling his art when the pandemic pushed him out of a day job he had previously been afraid to leave. He talked about taking risks and self-perception, asking ‘Why not me?’ He also talked about finding your motivation, in his case his family, though there are other possible “whys.” He also pointed out that he has found success without mastering “the algorithm,” in that he has few social media followers or little ‘influence’ in the online world. So: “Why not you?”

Last was Oak Felder, a music producer who talked about how to channel emotions through media, specifically music in his case. He made a case for the intrinsic emotional value within certain tones of music, as opposed to learned associations from movies and such. The way he sees it, there are “kernels of emotion” within music that are then shaped by a skilled composer or artist. He argued that the impact it has on others is what defines truly making music, and he closed his segment powerfully with a special-needs child being soothed by one of his songs during a medical procedure. Overall, the combined presentation was much stronger than the celebrity interview format of previous years.

Individual Sessions

With close to 200 different sessions and a finite amount of time, it can be a challenge to choose what to explore. I of course focused on video sessions, and within that, specifically on topics that would help me better utilize After Effects’ newest 3D object functionality. I already know a lot about navigating around in After Effects; what I was hoping to learn was how to prep assets for use in AE, but that was not covered in much depth. My one takeaway in that regard came from Robert Hranitzky in his Hollywood Look on a Budget session, where he explained that GLB files will work better than the OBJ files I had been using, because they embed the textures and other info directly in the file for After Effects to use. He also showed how to break a model into separate parts to animate directly in After Effects, in a way I had envisioned but hadn’t had a chance to try in the beta releases yet.

Ian Robinson’s Move into the Next Dimension with AE was a bit more beginner-focused, but he did point out that one of the unique benefits of Draft3D mode is that the render is not cropped to the view window, giving you an over-scan effect that shows what you might be missing outside your render perspective. He also did a good job of covering how the Cinema4D and Advanced3D render modes allow you to bend and extrude layers and edit materials, while the Classic3D render mode does not. I have done most of my AE work in Classic mode for the last two decades, but I may start using the new Advanced3D renderer for adding actual 3D objects to my videos for post-viz effects.

Nol Honig and Kyle Hamrick had a Leveling up in AE session, where they showed all sorts of shortcuts, as well as unique ways to utilize Essential Properties to create multiple varying copies of a single sub-comp. Among my favorite shortcuts: hitting ‘N’ while drawing a rectangle mask sets its mode to None, which lets you see the layer you are masking while you draw the rectangle. (Honestly, in my opinion it should default to None until you release the mouse button.) Ctrl+Home will center objects in the comp, and even more useful, Ctrl+Alt+Home will re-center the anchor point if it gets moved by accident. But they skipped ‘U’, which reveals all keyframed properties, and when pressed again reveals all modified properties. (They apparently assumed everyone knew about the Uber-Key.)

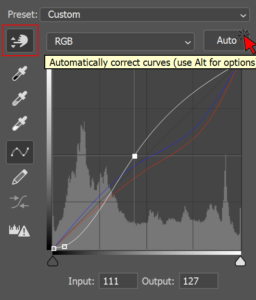

I also went to Rich Harrington’s Work Faster in Premiere Pro session, and while I didn’t learn too many new things about Premiere (besides the fact that copying the keyboard shortcuts to the clipboard results in a readable text list), I did learn some cool things in Photoshop that can be utilized in Premiere-based workflows. Photoshop is able to export Look-Up Tables that can be used to adjust the color of images in Premiere Pro via the Lumetri Color effect. It generates these lookup tables from adjustment layers applied to the image. While many of the same tools are available directly within Premiere, Photoshop has some further options that Premiere does not, and this is how you can utilize them for video.

First you export a still of the shot you want to correct, and bring it into Photoshop as a background image. Then you apply adjustment layers, specifically Curves in this case, which is a powerful tool that is not always intuitive to use. For one, Alt-clicking the ‘Auto’ button opens a separate window of more detailed options that I had never even seen. Then the top-left button in the Curves panel is the ‘Targeted Adjustment Tool,’ which allows you to modify the selected curve by clicking on the area of the image you want to change, and it will adjust that point on the curve. (Hey Adobe, I want this in Lumetri.) But for now, you get your still looking the way you want in Photoshop, and then export a Look-Up Table (LUT) for use in Premiere, or anywhere else you can use LUTs.
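
To make the idea of “baking” a Curves adjustment into a LUT a little more concrete, here is a minimal sketch of what such a file contains. It is not how Photoshop does it internally; it assumes a single master curve given as a few (input, output) control points, uses straight-line interpolation between them (Photoshop draws a smooth curve through its points), and writes the plain-text .cube format, one of the formats Lumetri can load. The function names `make_curve` and `build_cube_lut` are hypothetical.

```python
from bisect import bisect_right


def make_curve(points):
    """Return a function mapping [0, 1] -> [0, 1] by linear interpolation
    between (input, output) control points. Hypothetical helper; a real
    Curves adjustment uses a smooth spline instead of straight segments."""
    pts = sorted(points)
    xs = [p[0] for p in pts]
    ys = [p[1] for p in pts]

    def curve(v):
        # Clamp values outside the first/last control point.
        if v <= xs[0]:
            return ys[0]
        if v >= xs[-1]:
            return ys[-1]
        i = bisect_right(xs, v)  # xs[i - 1] <= v < xs[i]
        x0, x1 = xs[i - 1], xs[i]
        y0, y1 = ys[i - 1], ys[i]
        t = (v - x0) / (x1 - x0)
        return y0 + t * (y1 - y0)

    return curve


def build_cube_lut(curve, size=17, title="Curves sketch"):
    """Sample the curve on a size^3 RGB grid and return .cube text.
    In the .cube format the red index changes fastest, then green, then blue."""
    lines = [f'TITLE "{title}"', f"LUT_3D_SIZE {size}"]
    step = 1.0 / (size - 1)
    for b in range(size):
        for g in range(size):
            for r in range(size):
                # The same master curve is applied to each channel independently.
                out = (curve(r * step), curve(g * step), curve(b * step))
                lines.append(" ".join(f"{c:.6f}" for c in out))
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical gentle S-curve: slightly darker shadows, brighter highlights.
    s_curve = make_curve([(0.0, 0.0), (0.25, 0.2), (0.75, 0.8), (1.0, 1.0)])
    cube_text = build_cube_lut(s_curve, size=17)
    print(cube_text.splitlines()[1])  # prints LUT_3D_SIZE 17
```

Saving that text to a file with a .cube extension should make it loadable wherever LUTs are accepted. A single master curve does not really need a 3D LUT, but a 3D grid is what lets a LUT also capture adjustments that mix channels, which is why it is the common interchange form.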

Creativity Park

There was also a hall of booths from Adobe’s various hardware and software partners, some of which had new announcements of their own. The hall was divided into sections, with a quarter of it devoted to video, which in my observation is more than in previous years. Samsung was showing off their ridiculously oversized wraparound LCD displays, in the form of the 57″ double-wide UHD display and the 55″ curved TV display that can be run in portrait mode for an overhead feel. I am still a strong proponent of the 21:9 aspect ratio, as that is the natural shape of human vision, and anything wider requires moving your head instead of your eyes.

Logitech was showing off their new Action Ring function for their MX line of productivity mice. I have been using gaming mice for the last few years, and after talking with some of the reps in the booth, I believe I should migrate back to the professional options. The new Action Ring is similar to a feature in my Triathlon mouse, where you press a button to bring up a customizable context menu with various functions. It is still in beta, but it has potential.

LucidLink is a high-performance cloud storage provider whose storage appears to the OS as a standard drive letter, and they were showing off a new integration with Premiere Pro: a panel in the application that allows users to control which files keep a local copy, based on which projects and sequences they are used in. I have yet to try LucidLink myself, as my bandwidth was too low until this year, but I can envision it being a useful tool now that I have a fiber connection at home.

Adobe Sneaks

Adobe Sneaks is a staple event of the MAX conference, where the company’s engineers present technologies they are working on that have not yet made it into specific products. The technologies ranged from Project Primrose, a digital dress that can display patterns on black and white tiles, to automatically dubbing audio into multiple foreign languages via AI in Project Dub Dub Dub. The event was hosted by comedian Adam Devine, who offered some less technical observations about the new functions being showcased.

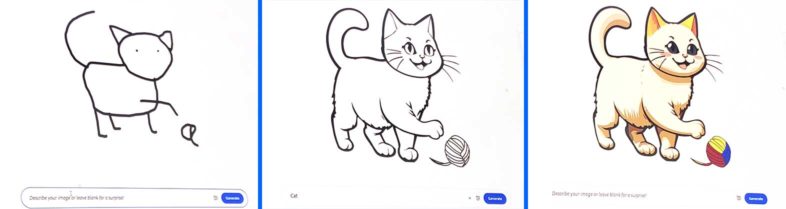

Illustration isn’t really my thing, but it could be once Project Draw & Delight comes to market. It uses the power of AI to convert the artistic masterpiece on the left into the refined images on the right, with the simple prompt “cat.” I am looking forward to seeing how much better my storyboard sketches will soon look with this simple and accessible technology.

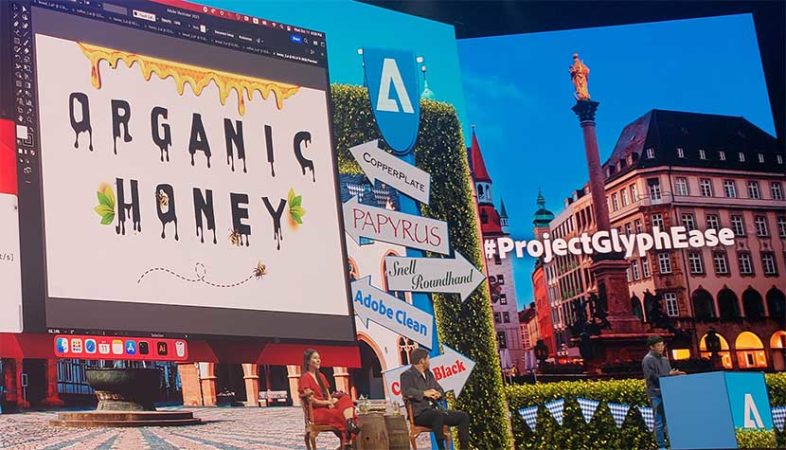

Adobe always does a lot with fonts, and Project Glyph Ease continues that trend with AI-generated complete fonts based on users drawing two or three letters. This is a natural extension of the new type editing features demonstrated in Illustrator the day before, where any font can be identified and matched from a couple of letters, even from vectorized outlines. But this tool can create whole new fonts instead of matching existing ones.

Project See Through was all about removing reflections from photographs, and it did a pretty good job on some complex scenes while preserving details. But the really impressive part was when they showed that the computer could also generate a full image of whatever was being reflected. That is a little scary when you consider that it will frequently be the photographer taking the photo. So much for the anonymity of being “behind the camera.”

Project Scene Change was a little rough in its initial presentation, but it is a really powerful concept. It extracts a 3D representation of a scene from a piece of source footage, and then uses that to create a new background for a different clip, with the background rendered to match the perspective of the foreground clip. And it is not really limited to backgrounds; that is just the easiest way to explain it in words. As you can see from the character behind the coffee cup in the scene, it really is creating an entire environment, not just a background. So it will be interesting to see how this gets fleshed out with user controls for larger-scale VFX work.

Project Res Up appears to be capable of true AI-based generative resolution improvements in video. I have been waiting for this ever since NVIDIA demonstrated live AI-generated upscaling of 3D-rendered images, which is a big part of what makes real-time ray tracing practical, but I hadn’t seen it in action for video until now. If we can create something out of thin air with generative AI, it stands to reason we should be able to improve something that already exists, but in another sense I recognize it is more challenging when you have a specific target to match. This is also why generative video is much harder to do than stills: each generated frame has to smoothly match the ones before and after it, and any artifacts will be much more noticeable to humans when in motion.

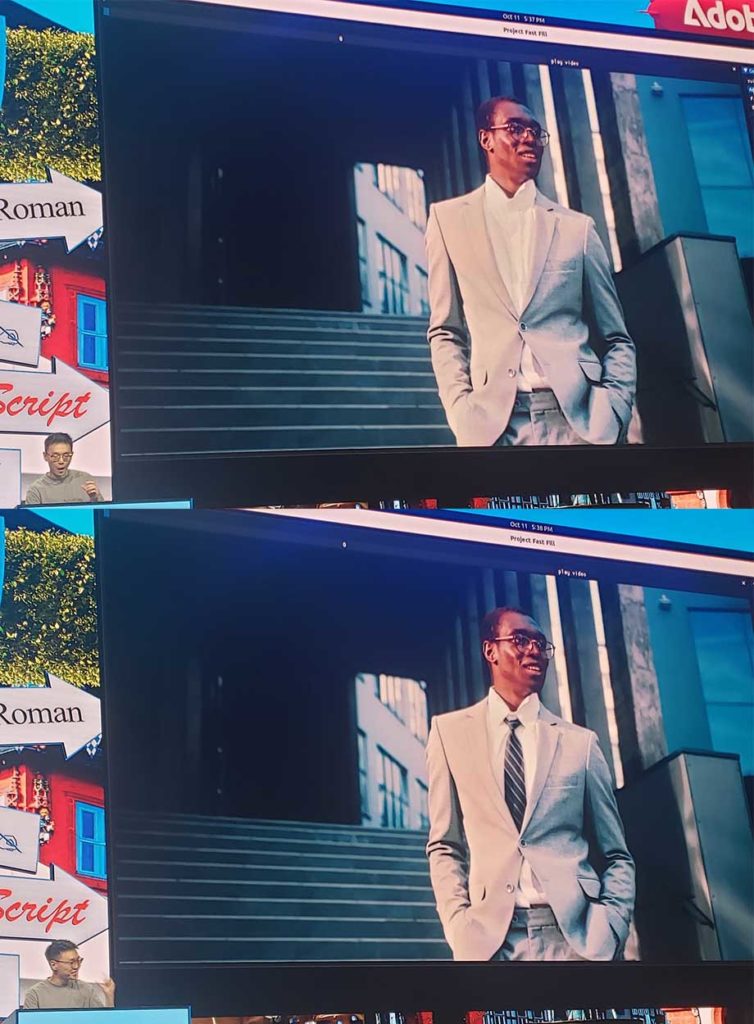

This is why the most powerful demo by far, from my perspective, was the AI-based generative fill for video, called Project Fast Fill. This was something I expected to see, but I did not anticipate it being so powerful yet. It started off with basic removal of distracting elements in the background.

It ended with adding a necktie to a strutting character walking through a doorway with complex lighting changes and camera motion, based on a simple text command and a vector shape to point the AI to the right place. The results were stunning enough that it was hard to believe this wasn’t “faked.” But assuming it wasn’t, it will revolutionize VFX much sooner than I expected.

MAX Bash

The conference was topped off with a big party in a downtown parking lot. I am not much of a music and dancing guy, but there was an impressive collection of food trucks and other culinary booths with all sorts of crazy things to try. My favorite was rather simple, and could easily be recreated at home: a crescent roll cooked in a waffle iron, but it was very good. That said, I am a fan of this outdoor party approach, compared to the clubs in Vegas where a number of industry events are held during NAB. It was not as loud, and there were lots of ways for Adobe to put their ‘Creative’ spin on the event. Anyhow, that wraps up my coverage of MAX, and hopefully gives readers a taste of what it is like to attend in person, instead of just watching the event online for free, which is still an option.