I had the opportunity to attend GoPro’s launch event in San Francisco on Thursday for their new Hero6 and Fusion cameras. The Hero6 is the next logical step in their line of action cameras, increasing the supported frame rates to 4Kp60 and 1080p240, as well as adding integrated image stabilization. The Fusion, on the other hand, is a totally new product for them: an action cam for 360-degree video. GoPro has developed a variety of 360-degree video capture solutions in the past, based on rigs built from many of their existing Hero cameras, but the Fusion is their first integrated 360 video solution.

While the Hero6 is available immediately for $499, the Fusion is expected to ship in November for $699. Although we got to see the Fusion and its footage, most of the hands-on aspects of the launch event revolved around the Hero6. Each attendee was given a Hero6 kit to record the rest of the day’s events. My group was taken for a ride on the RocketBoat through San Francisco Bay. This adventure took advantage of a number of the camera’s features, including the waterproofing, the slow motion, and the image stabilization. I have pieced together a quick highlight video of the various features below.

The big change within the Hero6 is the inclusion of GoPro’s new custom-designed GP1 image processing chip. This allows them to process and encode higher frame rates, and enables image stabilization at many frame-rate settings. The camera itself is physically similar to the previous generations, so all of your existing mounts and rigs will still work with it. Upgrading the Karma drone with the new camera is an easy swap, and the drone also got a few software improvements. It can now automatically point the camera at the controller, keeping the user in frame while the drone is following or stationary. It can also fly a circuit of ten waypoints for repeatable shots, and, overcoming a limitation I didn’t know existed, it can now look “up.”

There were fewer precise details about the Fusion. It is stated to be able to record a 5.2K video sphere at 30fps, and a 3K sphere at 60fps. This is presumably the circumference of the sphere in pixels, and therefore the width of an equirectangular output. That would lead us to conclude that each individual fish-eye recording is about 2600 pixels wide, plus a little overlap for the stitch. (GoPro’s David Newman details how the company arrives at 5.2K in this post.) The sensors are slightly laterally offset from one another, which allows the camera to be thinner and decreases the parallax shift at the side seams, but adds a slight offset at the top and bottom seams. If the camera is oriented upright, those seams are the least important areas in most shots. Based on the demo footage they were showing, they also appear to have a good solution for hiding the camera support pole within the stitch. It will be interesting to see what effect the Fusion has on the “culture” of 360 video. It is not the first affordable 360-degree camera, but it will definitely bring 360 video capture to new places.
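The 2600-pixel estimate is simple arithmetic: if “5.2K” is the full 360-degree circumference, each of the two back-to-back lenses has to cover 180 degrees of it. A minimal sketch, assuming “5.2K” means exactly 5200 pixels and treating the lens field of view as a parameter. The function name is hypothetical, and the 190-degree value in the usage note is a made-up stand-in for “a little overlap,” not a published Fusion spec.

```python
def fisheye_width_px(equirect_width_px: float, lens_fov_deg: float = 180.0) -> float:
    """Pixels one lens must resolve across its field of view.

    Assumes the equirectangular width spans the full 360 degrees, so pixel
    density is equirect_width_px / 360 pixels per degree of longitude.
    """
    # Multiply before dividing so round numbers stay exact in floating point.
    return equirect_width_px * lens_fov_deg / 360.0


# Each lens covers half the sphere's circumference at 180 degrees.
print(fisheye_width_px(5200))  # 5.2K mode -> 2600.0
print(fisheye_width_px(3000))  # 3K mode   -> 1500.0
```

Widening the assumed field of view to a hypothetical 190 degrees to leave room for the stitch gives `round(fisheye_width_px(5200, 190))`, or about 2744 pixels, which is the “plus a little overlap” part of the estimate.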

A big part of the equation for 360 video is the supporting software that gets the footage from the camera to the viewer in a usable way. GoPro acquired Kolor, and with it Autopano Video Pro, a few years ago to support image stitching for their larger 360 video camera rigs, so certain pieces of the underlying software ecosystem for a 360 video workflow are already in place. The desktop solution for processing the 360 footage will be called Fusion Studio, and is listed as coming soon on their website. They have a pretty slick demonstration of flat image extraction from the video sphere, which they are marketing as “OverCapture.” This lets you pan around the 360 sphere with a cellphone, which is pretty standard these days, but by recording that view in real time, they can output standard flat videos from the 360 sphere. This is a much simpler and more intuitive approach to virtual cinematography than trying to control the view with angles and keyframes in a desktop app. This workflow should result in a flat video with a strong fish-eye look, similar to the more traditional GoPro shots, due to the similar lens characteristics. There are a variety of possible approaches to handling the fish-eye look. GoPro’s David Newman was explaining to me some of the solutions he has been working on to re-project GoPro footage into a sphere, in order to re-frame it or alter the field of view in a virtual environment. Based on their demo reel, it looks like they also have some interesting tools coming that take advantage of the unique functionality 360 makes available to content creators, using various 360 projections for creative purposes within a flat video.
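GoPro didn’t describe how OverCapture works internally, but the basic idea of pulling a flat view out of an equirectangular sphere can be illustrated generically. The sketch below uses the standard rectilinear (gnomonic) projection: for each pixel of a virtual flat camera with a given yaw, pitch, and horizontal field of view, it computes which point of the equirectangular frame to sample. This is an assumption-laden illustration, not GoPro’s method; the function name and parameters are hypothetical.

```python
import math


def equirect_source_coord(x, y, out_w, out_h, hfov_deg, yaw_deg, pitch_deg,
                          src_w, src_h):
    """Map output pixel (x, y) of a virtual flat camera to a source (u, v)
    position in an equirectangular 360 frame of size src_w x src_h.

    Hypothetical helper for illustration only; not GoPro's OverCapture code.
    """
    # Focal length in pixels for a pinhole view with the given horizontal FOV.
    f = (out_w / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)

    # Ray through the pixel centre in camera space: +x right, +y up, +z forward.
    dx = (x + 0.5) - out_w / 2.0
    dy = out_h / 2.0 - (y + 0.5)
    dz = f

    # Tilt the ray by the pitch angle (rotation about the x axis; positive = up).
    p = math.radians(pitch_deg)
    dy, dz = (dy * math.cos(p) + dz * math.sin(p),
              -dy * math.sin(p) + dz * math.cos(p))

    # Pan the ray by the yaw angle (rotation about the y axis; positive = right).
    t = math.radians(yaw_deg)
    dx, dz = (dx * math.cos(t) + dz * math.sin(t),
              -dx * math.sin(t) + dz * math.cos(t))

    # Convert the direction to longitude/latitude on the sphere.
    lon = math.atan2(dx, dz)                  # -pi..pi, 0 = straight ahead
    lat = math.atan2(dy, math.hypot(dx, dz))  # -pi/2..pi/2, 0 = horizon

    # Equirectangular layout: longitude spans the width, latitude the height.
    u = (lon / (2.0 * math.pi) + 0.5) * src_w
    v = (0.5 - lat / math.pi) * src_h
    return u % src_w, v  # wrap u so lon = pi lands on column 0


# Centre pixel of a 101x101 view looking straight ahead lands mid-frame.
print(equirect_source_coord(50, 50, 101, 101, 90, 0, 0, 5200, 2600))
```

To render a frame, you would loop over every output pixel and sample the source at `(u, v)` with nearest-neighbour or bilinear interpolation; driving `yaw_deg` and `pitch_deg` from a phone’s orientation on each frame gives a workflow along the lines of the one demonstrated. A rectilinear projection was chosen here only because it is the simplest to write down. It removes the fish-eye curvature rather than imitating it, and it stretches noticeably toward the edges at wide fields of view.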

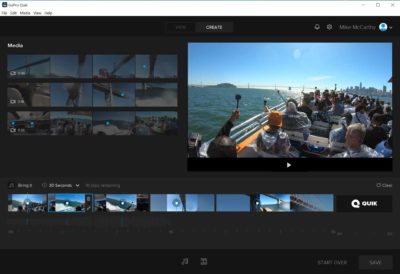

On the software front, GoPro has also been developing tools to help its camera users process and share their footage. One of the inherent issues with action camera footage is that there is basically no trigger discipline. You hit record long before anything happens, and then get back to the camera after the event in question is over. I used to get one-hour recordings that had 10 seconds of usable footage within them. The same is true when recording many attempts at something before one of them succeeds. Remote control of the recording process has helped with this a bit, but regardless, you end up with tons of extra footage that you don’t need. GoPro is working on software tools that use AI and machine learning to sort through your footage and find the best parts automatically. The next logical step is to start cutting together the best shots, which is what QuikStories in their mobile app is beginning to do. As someone who edits video for a living, and is fairly particular and precise, I have a bit of trouble with the idea of using something like that for my videos, but for someone who finds the idea of “video editing” intimidating, this could be a good place to start. And once the tools get to a point where their output can be trusted, automatically sorting footage could make even very serious editing a bit easier when there is a lot of potential material to get through. In the meantime, though, I find their desktop tool Quik too limiting for my needs, and will continue to use Premiere to edit my GoPro footage, which I believe is the response they expect from any professional user.

There are also a variety of new camera mount options available, including small extendable tripod handles in two lengths, as well as a unique “Bite Mount” for POV shots. It includes a colorful padded float in case it pops out of your mouth while shooting in the water. The tripods are extra important for the forthcoming Fusion, to support the camera with minimal obstruction of the shot. And I wouldn’t recommend using the Fusion on the Bite Mount, unless you want a lot of head in the shot.

Ironically, as someone who has processed and edited hundreds of hours of GoPro footage, and even worked for GoPro for a week on paper (as an NAB demo artist for CineForm during its acquisition), I don’t think I had ever actually used a GoPro camera. The fact that we were all handed new cameras with zero instructions and expected to go out and shoot is a testament to how confident GoPro is that their products are easy to use. I didn’t have any difficulty with it, but the engineer within me wanted to know the details of the settings I was adjusting. Bouncing around with water hitting you in the face is not the best environment for learning how to do new things, but I was able to use pretty much every feature the camera had to offer during that ride with no prior experience. (Obviously I have extensive experience with video, just not with using a GoPro.) And I was pretty happy with the results. Now I want to take it sailing, skiing, and other such places, just like a “normal” GoPro user.