I had the opportunity to attend Adobe’s MAX conference at the LA Convention Center this week. Adobe showed me and 15K of my closest friends the newest updates to pretty much all of their Creative Cloud applications, as well as a number of interesting upcoming developments. From a post perspective, the most significant pieces of news are the release of Premiere Pro 14, and After Effects 17 (AKA the 2020 releases of those Creative Cloud apps).

The main show runs from Monday to Wednesday, with a number of pre-show seminars and activities the preceding weekend. My experience started off by attending a screening of the new film Terminator: Dark Fate at LA Live, followed by a Q&A with the director and post team. Terminator was edited in Premiere Pro, with the project assets shared between a large team of editors and assistants, and with extensive use of After Effects, Adobe’s newly acquired Substance app, and various other tools in the Creative Cloud. The post team extolled the improvements in shared project support and project opening times since their last Premiere endeavor on Marvel’s Deadpool. Visual Effects Editor Jon Carr shared how they used the integration between Premiere and After Effects to facilitate rapid generation of temporary “post-viz” effects, to help the editors tell the story while they were waiting on the VFX teams to finish generating the final CGI characters and renders.

The conference itself kicked off with a keynote presentation of all of Adobe’s new developments and releases. The 150 minute presentation covered all aspects of the company’s extensive line of applications. “Creativity for All” is the primary message Adobe is going for, and they focused on the tension between creativity and time. So they are trying to improve their products in ways that give their users more time to be creative. The three prongs of that approach for this iteration of updates were:

Faster, more powerful, more reliable (Fixing time-wasting bugs, improving hardware utilization)

Create anywhere, anytime, with anyone (Adding functionality via iPad, and shared Libraries for collaboration)

Explore new frontiers (Specifically in 3D with Dimension, Substance, and Aero)

Education is also an important focus for Adobe, with 15 million copies of CC in use in education around the world. They are also creating a platform for CC users to stream their working process to viewers who want to learn from them, directly from within the applications. That will probably integrate with the new expanded Creative Cloud app released last month. They have also released integration for Office apps to access assets in CC libraries.

The first actual application updates they showed off were in Photoshop. They have made the new locked aspect ratio scaling a toggle-able behavior, improved the warp tool, and improved ways to navigate deep layer stacks by seeing which layers affect particular parts of an image. But the biggest improvement is AI-based object selection, which makes detailed masks based on simple box selections or rough lassos. Illustrator now has GPU acceleration, improving performance of larger documents, and a path simplifying tool to reduce the number of anchor points.

They released Photoshop for iPad, and announced that Illustrator is coming to iPad as well. Fresco is headed the other direction, now available on Windows. That is currently limited to Microsoft Surface products, but I look forward to being able to try it out on my ZBook-X2 at some point. Adobe XD has new features, and apparently is the best way to move complex Illustrator files into After Effects, which I learned at one of the sessions later.

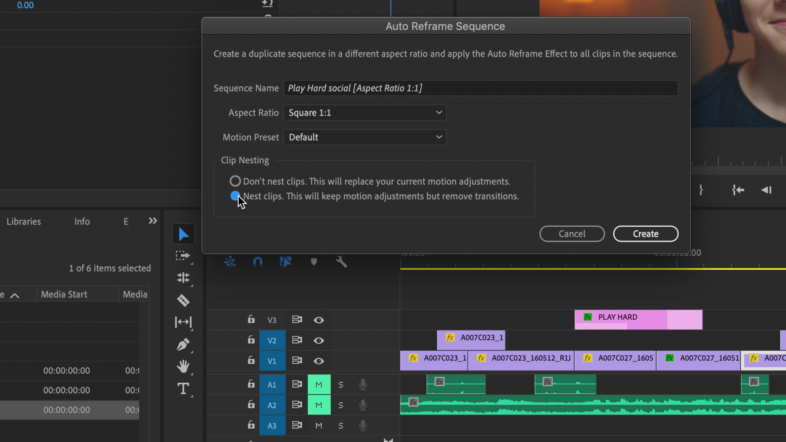

Premiere Pro 14 has a number of new features, the most significant one being AI-driven Auto Reframe, which automatically converts your edited project into other aspect ratios for various deliverables. 16×9 is obviously a standard size, but certain web platforms are optimized for square or tall videos. The feature can also be used to reframe content from 2.35 to 16×9 or 4×3, which are frequent delivery requirements for feature films that I work on. My favorite aspect of this new functionality is that the user has complete control over the results. Unlike other automated features like Warp Stabilizer, which only offer the choice of applying the results or not, the Auto Reframe function just generates motion effect keyframes that can be further edited and customized by the user once the initial AI pass is complete. It also has a nesting feature for retaining existing framing choices, which results in the creation of a new single layer source sequence. I can envision that being useful for a number of other workflow processes (preparing for external color grading or texturing passes, etc.).
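To make the reframing arithmetic concrete, here is a rough sketch of the geometry any reframe has to work within: how large a crop window of a given aspect ratio fits inside the source frame, and how far that window can pan before it runs off the edge. This is not Adobe's algorithm, and the function names `crop_window` and `pan_range` are my own inventions for illustration. It only shows the bounds that position keyframes would have to stay inside.

```python
from fractions import Fraction


def crop_window(src_w, src_h, target_aspect):
    """Largest window of the target aspect ratio that fits in the source frame.

    Hypothetical helper for illustration; returns (width, height) in pixels,
    truncated to whole pixels.
    """
    src_aspect = Fraction(src_w, src_h)
    if target_aspect < src_aspect:
        # Target is narrower than the source: keep full height, crop the sides.
        return int(src_h * target_aspect), src_h
    # Target is wider (or equal): keep full width, crop top and bottom.
    return src_w, int(src_w / target_aspect)


def pan_range(src_w, crop_w):
    """Horizontal offsets from frame center that keep the crop inside the frame."""
    slack = (src_w - crop_w) // 2
    return -slack, slack


if __name__ == "__main__":
    # A 1920x1080 (16x9) sequence reframed to square and to tall 9x16.
    for name, aspect in [("square", Fraction(1, 1)), ("tall", Fraction(9, 16))]:
        w, h = crop_window(1920, 1080, aspect)
        print(name, (w, h), "pan range:", pan_range(1920, w))
```

For a 1920×1080 source, a square deliverable leaves 420 pixels of travel on either side of center, which is the room the motion keyframes have to follow the action.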

They also added better support for multi-channel audio workflows and effects, improved playback performance for many popular video formats, better HDR export options, and a variety of changes to make the Motion Graphics tools more flexible and efficient for users who use them extensively. They also increased the range of values available for clip playback speed and volume, and added support for new camera formats and derivations.

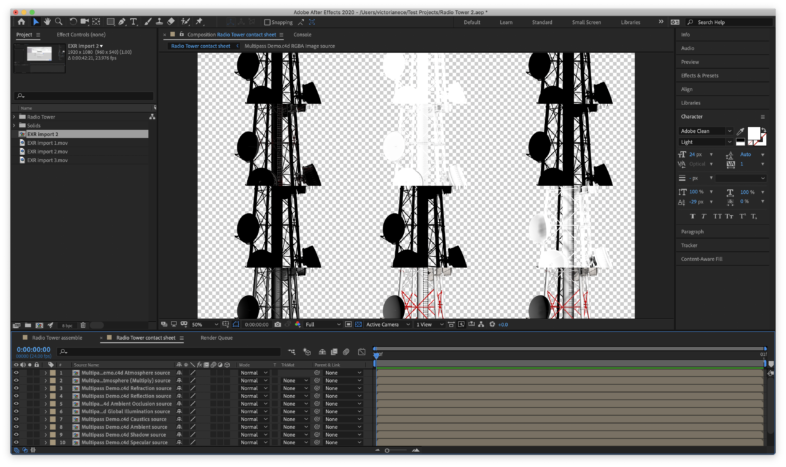

The After Effects team focused on improving playback and performance for this release, and has made some significant improvements in that regard. The other big feature that may actually make a difference is content-aware fill for video. This was sneak previewed at Adobe MAX last year, and first implemented in the NAB 2019 release of After Effects, but it should be greatly refined and improved in this version, as well as being twice as fast. They also greatly improved support for OpenEXR frame sequences, especially those with multiple render pass channels. The channels can be labeled, and the application creates a video contact sheet for viewing all the layers in thumbnail form. EXR playback performance is supposed to be greatly improved as well.

Character Animator is now at 3.0, and they have added keyframing of all editable values, trigger-able reposition “cameras” and trigger-able audio effects, among other new features. And Adobe Rush now supports publishing directly to TikTok.

Outside of individual applications, Adobe has launched the Content Authenticity Initiative in partnership with the NY Times and Twitter. It aims to fight fake news and restore consumer confidence in media. Its three main goals are Trust, Attribution and Authenticity. It aims to show end users who created an image, whether anyone edited or altered it, and if so, in what ways. Seemingly at odds with that, they also released a new mobile app that edits images upon capture, using AI-powered “lenses” for highly stylized looks, even providing a live view.

This opening keynote was followed by a selection of over 200 different labs and sessions available over the next three days. I attended a couple sessions focused on After Effects, as that is a program I know I don’t utilize to its full capacity. (Does anyone, really?)

A variety of other partner companies were showing off their products in the community pavilion. HP was pushing 3D printing and digital manufacturing tools that integrate with Photoshop and Illustrator. Dell has a new 27″ color accurate monitor with built-in colorimeter, presumably to compete with HP’s top end DreamColor displays. Asus also has some new HDR monitors that are Dolby Vision compatible. One is designed to be portable, and is as thin and lightweight as a laptop screen. I have always wondered why that wasn’t a standard approach for desktop displays.

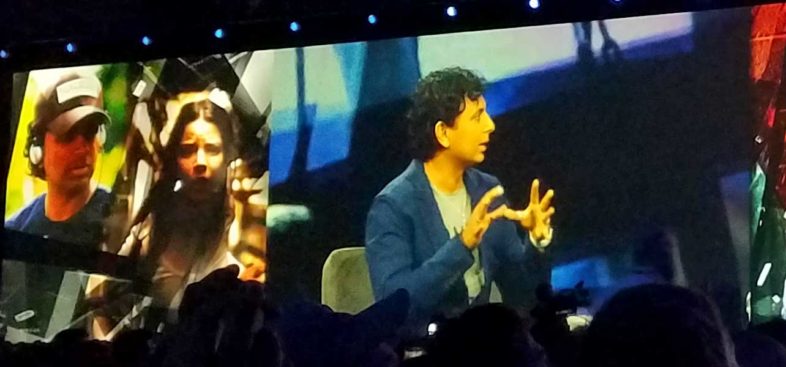

Tuesday opened with a keynote presentation from a number of artists of different types, speaking or being interviewed. Jason Levine’s talk with M. Night Shyamalan was my favorite part, even though thrillers aren’t really my cup of tea. Later, I was able to sit down and talk with Patrick Palmer, Principal Product Manager for Editing at Adobe, about where Premiere is headed, and the challenges of developing HDR creation tools when there is no unified set of standards for final delivery. But I am looking forward to being able to view my work in HDR while I am editing at some point in the future.

One of the highlights of MAX is the 90 minute Sneaks session on Tuesday night, where comedian John Mulaney ‘helped’ a number of Adobe researchers demonstrate new media technologies they are working on. These will eventually improve audio quality, automate animation, analyze photographic authenticity, and handle many other tasks, once they are refined into final products at some point in the future.

This was only my second time attending MAX, and with Premiere Rush being released last year, video production was a key part of that show. This year, without that factor, it was much more apparent to me that I was an engineer attending an event catering to designers. Not that this is bad, but I mention it here because it is good to have a better idea of what you are stepping into when you are making decisions about whether to invest in attending a particular event.

Adobe focuses MAX on artists and creatives, as opposed to engineers and developers, who have other events that are more focused on their interests and needs. I suppose that is understandable since it is not branded ‘Creative Cloud’ for nothing. But it is always good to connect with the people who develop the tools I use, and the others who use them with me, which is a big part of what Adobe MAX is all about.