This week was NVidia’s GTC conference, which brought a number of interesting announcements. Most relevant to the M&E space are the new Ada Lovelace based GPUs. To accompany the existing RTX 6000, there is now a new RTX 4000 small form factor card, as well as five new mobile GPUs offering various levels of performance and power usage.

The new mobile options all offer performance that matches or exceeds the next higher tier in the previous generation: the new 2000 Ada is as fast as the previous A3000, and the new 4000 Ada exceeds the previous top-end A5500. The new mobile RTX 5000 Ada chip, with 9728 CUDA cores and 42 teraflops of single-precision compute performance, should outperform the previous A6000 desktop card or the GeForce 3090 Ti. If true, that is pretty impressive, although there is no word on battery life.
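
As a rough sanity check on those numbers, here is a minimal Python sketch that works backward from the quoted core count and teraflops figure to the clock speed they imply. It assumes the usual convention for peak single-precision figures, one fused multiply-add (two floating point operations) per CUDA core per clock. The function names are my own, and the resulting clock is a derived estimate, not a published spec.

```python
def peak_fp32_tflops(cuda_cores: int, clock_ghz: float) -> float:
    """Peak single-precision teraflops, assuming 2 flops (one FMA) per core per clock."""
    # cores * GHz gives giga-operations per second; x2 for FMA; /1000 converts to tera.
    return 2 * cuda_cores * clock_ghz / 1000.0


def implied_clock_ghz(cuda_cores: int, peak_tflops: float) -> float:
    """Clock speed (GHz) needed to reach a quoted peak, under the same assumption."""
    return peak_tflops * 1000.0 / (2 * cuda_cores)


if __name__ == "__main__":
    # Figures quoted above for the mobile RTX 5000 Ada.
    clock = implied_clock_ghz(cuda_cores=9728, peak_tflops=42.0)
    print(f"Implied clock: {clock:.2f} GHz")
```

With the quoted figures this works out to a little under 2.2 GHz, which would have to be sustained in a laptop chassis to actually hit that peak.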

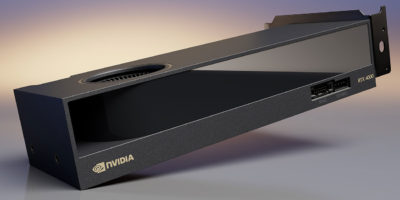

The new RTX 4000 small form factor Ada takes the performance of the previous A4000 GPU, ups the memory buffer to 20GB, and fits it into the form factor of the previous A2000 card: a low profile dual slot PCIe card that only uses the 75 watts available from the PCIe slot. This allows it to be installed in small form factor PCs or 2U servers that don’t have full height slots, or that lack the PCIe power connectors that most powerful GPUs require. Strangely, it is lower performance, at least on paper, than the new mobile 4000, with 20% fewer cores and 40% lower peak performance, if the specs I was given are correct. That is possibly due to the 75W power limit of the PCIe slot. So the naming conventions across the various product lines continue to get more confusing and less informative, which I am never a fan of. My recommendation is to call them the Ada19 or Ada42 based on the peak teraflops, so it is easy to see how they compare, even across generations against the Turing8 or the Ampere24. This should work for at least the next 4-5 generations, until we reach petaflops, when the numbering will need to be reset again.
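
Two bits of arithmetic in that paragraph can be sketched in a few lines of Python. The first assumes peak performance scales as cores times clock speed, so 20% fewer cores with 40% lower peak implies a lower clock as well, which fits the power limit theory. The second is a hypothetical helper for the teraflops-based naming idea. Both function names are made up for illustration, and the input figures are just the ones implied by the names used above.

```python
def implied_clock_ratio(core_ratio: float, peak_ratio: float) -> float:
    """Relative clock speed implied by relative core count and relative peak.

    Assumes peak performance is proportional to cores * clock.
    """
    return peak_ratio / core_ratio


def tflops_name(architecture: str, peak_tflops: float) -> str:
    """Hypothetical naming helper: architecture plus rounded peak teraflops."""
    if peak_tflops >= 1000:
        # At a petaflop and beyond, the numbering would need to be reset.
        raise ValueError("peak is in petaflops territory; scheme needs a reset")
    return f"{architecture}{round(peak_tflops)}"


if __name__ == "__main__":
    # 20% fewer cores (0.8x) and 40% lower peak (0.6x), per the specs I was given.
    ratio = implied_clock_ratio(core_ratio=0.8, peak_ratio=0.6)
    print(f"Implied clock is about {ratio:.0%} of the mobile part's")

    # Peak teraflops figures implied by the example names in the text.
    cards = [("Turing", 8), ("Ampere", 24), ("Ada", 19), ("Ada", 42)]
    for arch, tflops in sorted(cards, key=lambda card: card[1]):
        print(tflops_name(arch, tflops))
```

Sorting by the number alone puts every card in performance order regardless of generation, which is the whole point of the scheme.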

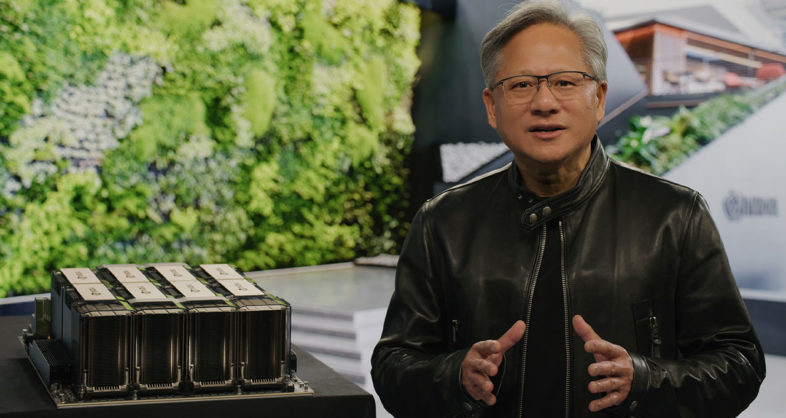

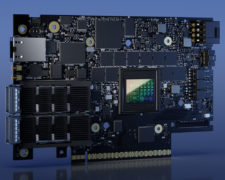

There are also new announcements targeted at supercomputing and datacenters. The Hopper GPU is focused on AI and large language model acceleration, and is usually installed in sets of eight SXM modules in a DGX server. Their previously announced Grace chip, a new ARM based CPU, is now in production. They offer these chips on dual-CPU processing boards, or combined as an integrated Grace-Hopper superchip with a shared interface bus and memory between the CPU and GPU. The new Apple Silicon processors use the same unified memory approach.

There are also new PCIe based accelerator cards, starting with the H100 NVL, which is the Hopper architecture in a PCIe card, with 94GB of memory for transformer processing. ‘Transformer’ is the ‘T’ in ChatGPT. There are also Lovelace architecture based options, including the single slot L4 for AI video processing and the dual slot L40 for generative AI content creation.

Four of these L40 cards are included in the new OVX-3 servers, designed for hosting and streaming Omniverse data and applications. These new servers from various vendors will have options for either Intel Sapphire Rapids or AMD Genoa based platforms, and will include the new Bluefield-3 DPU cards and ConnectX-7 NICs. They will also be available in a predesigned Superpod of 64 servers and a Spectrum-3 switch, for companies that have a lot of 3D assets to deal with.

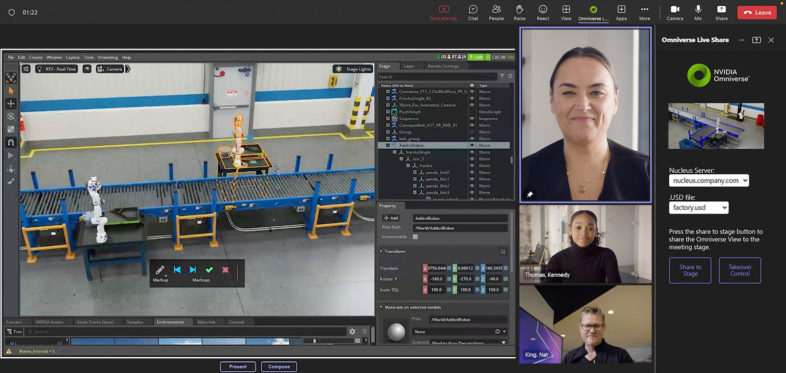

On the software side, Omniverse has a variety of new applications that support its popular USD data format for easier interchange, and it now supports their real-time ray-traced sub-surface scattering shader (maybe RTRTSSSS for short?) for more realistic surfaces. NVidia is also partnering closely with Microsoft to bring Omniverse to Azure and to MS 365, which will allow MS Teams users to explore 3D worlds together during meetings.

NVidia Picasso is now available to developers like Adobe, using generative AI to convert text into images, videos, or 3D objects. So in the very near future we will reach a point where we can no longer trust the authenticity of any image or video that we see online. It is not difficult to see where that might lead us. One way or another, it will be much easier to add artificial elements to images, videos, and 3D models. Maybe I will finally get into Omniverse myself when I can just tell it what I have in mind, and it creates a full 3D world for me. Or maybe I just need it to add a helicopter into my footage for a VFX shot, with the right speed and perspective. That would be helpful.

Some of the new AI developments can be concerning from a certain perspective, but hopefully these new technologies can be harnessed to improve both our experience working and our final output. And NVidia’s products are definitely accelerating the development and implementation of AI across the board.