NVidia is releasing an updated generation of GeForce video cards based on their new Ampere architecture. NVidia also shared a number of new software developments they have been working on. Some are available now, and others are coming soon. The first three cards in the GeForce RTX 30-Series are the 3070, 3080, and 3090. The cards have varying numbers of CUDA cores and amounts of video memory, but all strongly outperform the cards they are replacing. They all support the PCIe 4.0 bus standard, for greater bandwidth to the host system. They also support HDMI 2.1 output, to drive displays at 8Kp60 with a single cable, and can encode and decode at 8K resolutions. I have had the opportunity to try out the new GeForce RTX 3090 in my system, and am excited by the potential that it brings to the table.

NVidia originally announced their Ampere GPU architecture back at GTC in May, but it was initially only available for supercomputing customers. Ampere has second-generation RT cores and third-generation Tensor cores, with twice as many CUDA cores per streaming multiprocessor (SM), leading to some truly enormous core counts. These three new GeForce graphics cards improve on the previous Turing generation products by a significant margin, due both to increased cores and video memory, and to software developments that improve efficiency.

The biggest change to improve 3D render performance is the second generation of DLSS (Deep Learning Super Sampling). Basically, NVidia discovered that it was more efficient to use AI to guess the value of a pixel than to actually calculate it from scratch. DLSS 2.0 runs on the Tensor cores, leveraging deep learning to increase the detail level of a rendered image by increasing its resolution via intelligent interpolation. It is basically the opposite of Dynamic Super Resolution (DSR), which renders higher resolution frames and scales them down to fit a display’s native resolution, potentially improving image quality. DLSS usually increases the pixel count by a factor of four, for example generating a UHD frame from an HD render, but it now also supports a factor of nine, generating an 8K output image from a 2560×1440 render. While this can improve performance in games, especially at high display resolutions, DLSS can also be leveraged to improve the interactive experience in 3D modeling and animation applications.
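
The scaling factors mentioned above are pixel-count ratios, so each axis is scaled by the square root of the factor. The short Python sketch below only illustrates that arithmetic for the two examples given; it says nothing about how DLSS works internally, and the function name `dlss_render_resolution` is a hypothetical helper made up for this illustration.

```python
import math


def dlss_render_resolution(output_width, output_height, pixel_factor):
    """Return the internal render size for a given output size.

    pixel_factor is the ratio of output pixels to rendered pixels
    (4 or 9 in the examples above), so each axis is divided by its
    square root.
    """
    axis_scale = math.isqrt(pixel_factor)
    if axis_scale * axis_scale != pixel_factor:
        raise ValueError("pixel_factor must be a perfect square")
    return output_width // axis_scale, output_height // axis_scale


# UHD output from an HD render (factor of four)
print(dlss_render_resolution(3840, 2160, 4))
# 8K output from a 2560x1440 render (factor of nine)
print(dlss_render_resolution(7680, 4320, 9))
```

In other words, the factor-of-nine mode only has to render one ninth of the pixels that end up on an 8K display, which is where the performance headroom comes from.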

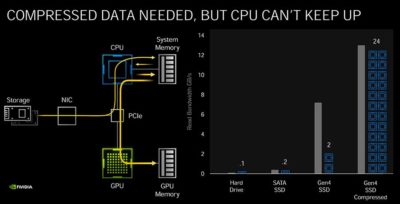

NVidia also announced details for another under-the-hood technology they call RTX IO, which will allow the GPU to directly access data in storage, bypassing the CPU and system memory. It will use Microsoft’s upcoming DirectStorage API to do this at an OS level. This frees those CPU and host system resources for other tasks, and should dramatically speed up scene or level loading times. They are talking about 3D scenes and game levels opening 5-10 times faster when the data is decompressed from storage directly on the GPU. If this technology can be used to decompress video files directly from storage, that could help video editors as well. They also have RTXGI, which is accelerated global illumination running on RT cores. The new second-generation RT cores also add support for rendering motion blur far more efficiently than was possible in the past.

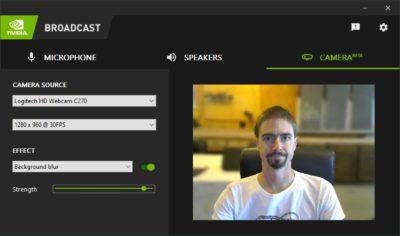

NVidia Reflex is a new technology for measuring and reducing gaming lag, in part by passing the mouse data through the display, to compare the user’s movements to changes on screen. Omniverse Machinima is a toolset aimed at making it easier for users to utilize 3D gaming content to create cinematic stories. It includes tools for animating character face and body poses, as well as RTX accelerated environmental editing and rendering. NVidia Broadcast is a software tool that utilizes deep learning to improve your webcam stream, with AI powered background noise reduction, and AI subject tracking or automated background replacement, among other features. The software creates a virtual device for the improved stream, allowing it to be utilized by nearly any existing streaming application. Combining it with NDI Tools and/or OBS Studio could lead to some very interesting streaming workflow options, and it is available now, if you have an RTX card.

The New GeForce Cards

The three new cards are available from NVidia as Founders Edition products, and from other GPU vendors with their own unique designs and variations. The Founders Edition cards have a consistent visual aesthetic, which is strongly driven by their thermal dissipation requirements. The GeForce RTX 3070 will launch next month for $500, and has a fairly traditional cooling design, with two fans blowing on the circuit board. The 3070 offers 5888 CUDA cores and 8GB of GDDR6 memory, a huge performance increase over the previous generation’s RTX 2070 with 2304 cores. With the help of DLSS and other developments, NVidia claims that the 3070’s render performance in many tasks is on par with the RTX 2080 Ti, which costs over twice as much.

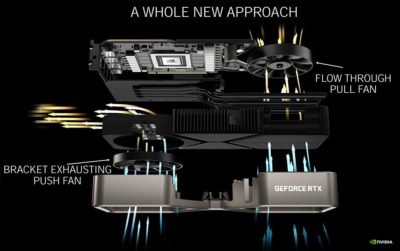

The new GeForce RTX 3080 is NVidia’s flagship ‘gaming’ card, with 8704 CUDA cores and 10GB of GDDR6X memory for $700. It should offer 50% more processing power than the upcoming RTX 3070, and double the previous RTX 2080’s performance. Its 320-watt TDP is high, requiring a new board design for better airflow and ventilation. The resulting PCB is half the size of the previous RTX 2080’s, allowing air to flow through the card for more effective cooling. But the resulting product is still huge, mostly heat pipes, fins, and fans.

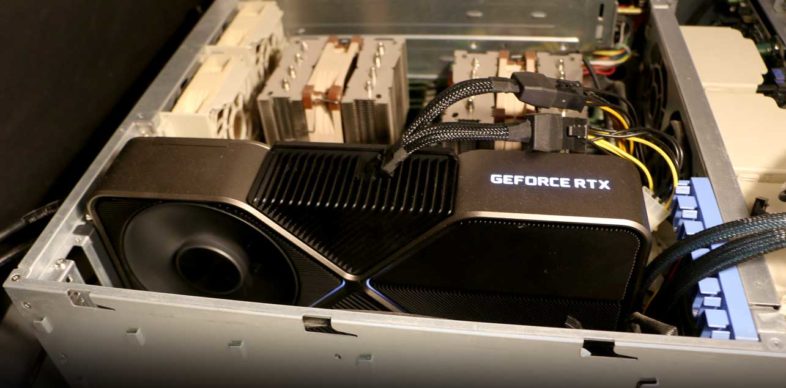

Lastly, the GeForce RTX 3090 occupies a unique spot in the lineup. At $1500, it exceeds the Titan RTX in every way, with 10,496 CUDA cores and 24GB of GDDR6X memory, for 60% of the cost. It is everything the 3080 is, but scaled up. It has the same compressed board shape to allow for the unique airflow design, in a 3-slot package that is an inch taller than a full height card. This allows it to dissipate up to 350W of power. Its size means you need to ensure that it can physically fit in your system case, or design a new system around it.

The 3090 is primarily targeted at content creators, optimized for high-resolution image processing, be that gaming at 8K, encoding and streaming at 8K, editing 8K video, or rendering massive 3D scenes. It is also the only one of the new GeForce cards that allows for NVLink to harness the power of two cards together, but that has been falling out of favor with many users anyway, as individual GPUs increase in performance so much with each new generation.

All of the cards offer three DisplayPort 1.4 outputs and a single HDMI 2.1 port. They offer hardware decode of the new AV1 video codec up to 8K resolution, and encode and decode of H.264 and HEVC, including screen capture and livestreaming of 8K HDR content through ShadowPlay. All three utilize a new 12-pin PCIe power connector, which can be adapted from dual 8-pin connectors for the time being, but expect to see these new plugs on power supplies in the future.

My Initial Tests

I was excited to recently receive a new GeForce RTX 3090 to test out, primarily for 8K and HDR workflows. I will also be reviewing a new workstation in the near future, which will pair well with this top end card, but I have not received that system yet, and wanted to get my readers some immediate initial results. I will follow up with more extensive benchmarking comparing this card to the 2080 Ti and P6000 next month, once I have that system to test with. So I set about installing the 3090 into my existing workstation, which was a bit of a challenge. I had to rearrange all of my cards, and remove some, to free up three adjacent PCIe slots. I also had to adapt some power cables to get the two 8-pin PCIe plugs I needed for the included adapter to connect to the new 12-pin mini plug. Everything I did seems to work fine for the time being, but I can’t close the top of the 3U rack mount case. (One more reason besides airflow to always opt for the 4U option.) Since case enclosures are important for proper cooling, I added a large fan over the whole open system.

I had already installed the newest Studio Driver, which worked with my existing Quadro cards, as well as the new GeForce cards. That is one of the many benefits of the new unified Studio Drivers: I don’t have to change drivers when switching between GeForce and Quadro cards for comparison benchmarks. I am testing on my Dell UP3218K monitor, running at 8K on two DisplayPort cables, and while 8K is always impressive, this config has been supported by NVidia since the Pascal generation, so this part is hardly news.

Software Benchmark Tests

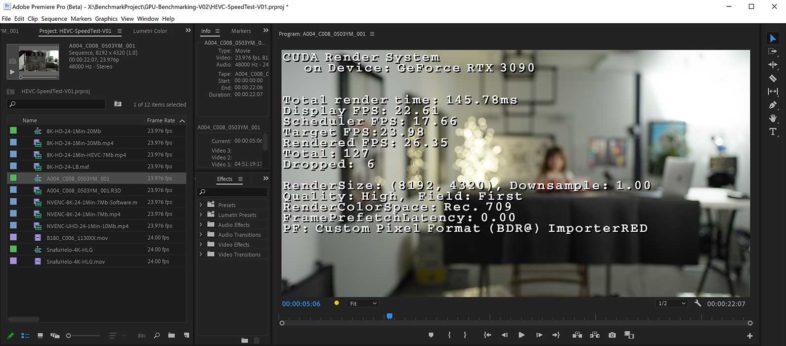

My first test was in Premiere Pro, where I discovered that new architectures make a significant difference when you access the GPU as deeply as Adobe does, so I had to use the newest public beta version of Premiere Pro to get playback working with CUDA enabled. The newest beta version of Premiere Pro also supports GPU accelerated video decoding, which is great, but it makes it hard to tell which speed improvements are from their software optimizations, and which are from this new hardware. Playback performance can be hard to quantify compared to render times, but Ctrl+Alt+F11 enables some onscreen information that gives Premiere users some insight into how their system is behaving during playback, combined with the dropped frame indicator, and 3rd party hardware monitoring tools.

I got smooth Premiere playback of 4K HDR material with high quality playback enabled, which is necessary to avoid banding. 8K sequences played back well at half res, but full resolution was hit or miss, depending on how much I started and stopped playback. Playing back long clips worked well, but stopping and resuming playback quickly usually led to dropped frames and hiccupping playback. Full res 8K playback is only useful if you have an 8K display, which I do, but 8K content can be edited at lower display resolution if necessary. Basically my current system is sufficient for 4K HDR editing, but to really push this card to its full potential for smooth 8K editing, I need to put it in a system that isn’t 7 years old.

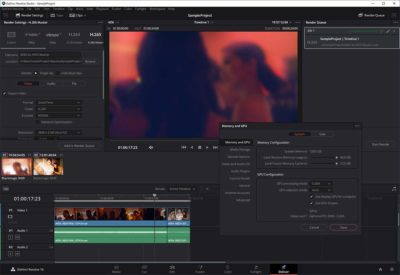

DaVinci Resolve also benefits from the new hardware, especially the increased RAM, for things like image de-noising and motion blur at larger frame sizes, which fail without enough memory on lower end cards. While technically previous generation Quadro cards have offered 24GB RAM, they were at much higher price points than the $1500 GeForce 3090, making it the budget friendly option from this perspective. And the processing cores help as well, as NVidia reports a 50% decrease in render times for certain complex effects, which is a better improvement than they claim for similar Premiere Pro export processes.

RedcineX Pro required a new beta update to utilize the new card’s CUDA acceleration. The existing version does support the RTX 3090 in OpenCL mode, but CUDA support in the new version should be faster. I was able to convert an 8K anamorphic R3D file to UHD2 ProRes HDR, which had repeatedly failed due to memory errors on my previous 8GB Quadro P4000, so the extra memory does help. My 8K clip takes 30 minutes to transcode in CPU mode, and just over a minute with the RTX 3090’s CUDA acceleration.

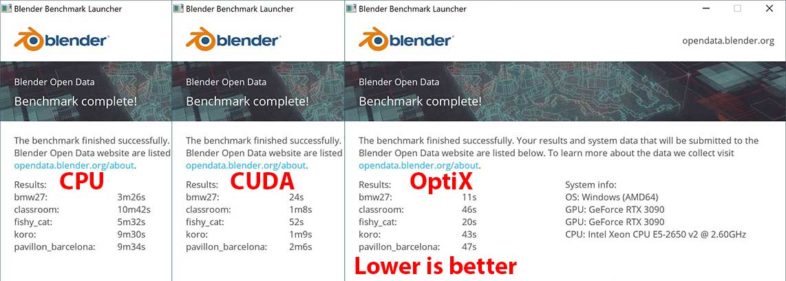

Blender is an open source 3D program for animation and rendering, which benefits from CUDA, the OptiX SDK, and the new RTX motion blurring functionality. Blender has its own benchmarking tool, and my results were much faster with OptiX enabled. I have not tested with Blender in the past, so I don’t have previous benchmarks to compare to, but it is pretty clear that having a powerful GPU makes a big difference here, compared to CPU rendering.

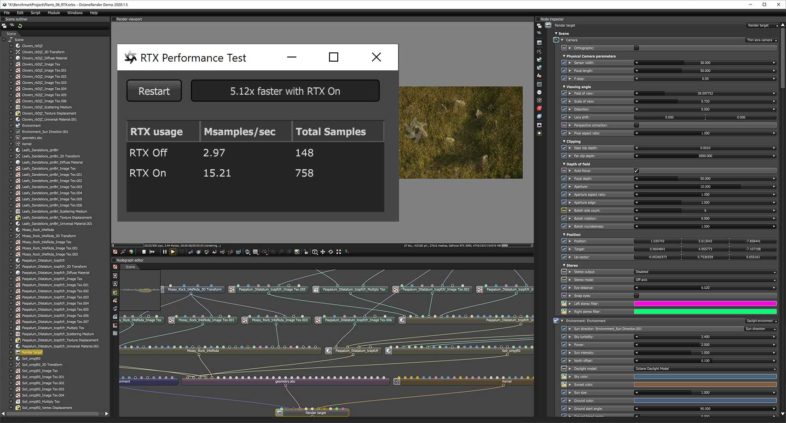

Octane is a render engine that explicitly uses RTX to do ray traced rendering, and all three scenes I tried were 2-5 times faster with RTX enabled. I expect we will see these kinds of performance improvements in any other 3D applications that take the time to properly implement RTX ray tracing acceleration.

I also tried a few of my games, but realized that nothing I play is graphics intensive enough to see much difference with this card. I mostly play classic games from many years ago, which don’t tax the GPU heavily even at 8K resolution. I may test Fortnite for my follow-up benchmarking article, as that game will benefit from both RTX and DLSS, in HDR, potentially at 8K.

From a video editor’s perspective, most of us are honestly not GPU bound in our workflows, even at 8K, at least in terms of raw processing power. Where we will see significant improvements is in system bandwidth as we move to PCIe 4.0, and from the added memory on the cards, for processing larger frames, in both resolution and bit depth. The content creators who will see the most significant improvements from the new GPUs are those working with true 3D content. Between OptiX, RTX, DLSS, and a host of other new technologies, and more processing cores and memory, they will have dramatically more interactive experiences when working with complex scenes, at higher levels of real-time detail.

Do You Need Ampere?

So who needs these, and which card should you get? Anyone who is currently limited by their GPU could benefit from one, but the benefits are greater for people processing actual 3D content. Honestly, the 3070 should be more than enough for most editors’ needs, unless you are debayering 8K footage, or doing heavy grading work on 4K assets. The step up to the 3080 is 40% more money for nearly 50% more cores, and 25% more memory, so 3D artists who need the extra cores will find it easily worth the cost. The step up to the 3090 is more than twice the price, for more than twice the memory, but only 20% more processing cores. So this upgrade is all about larger projects and frame sizes, as it won’t improve 3D processing by nearly as much, unless your data would have exceeded the 3080’s 10GB memory limit. There are also the size considerations for the 3090: do you have the slots, physical space, and power to install a 350W beast? Other vendors may create different configurations, but they are going to be imposing, regardless of the details.

So while I love the new cards, due to my fascination with high end computing hardware, I personally have trouble taxing this beast’s capabilities enough with my current system to make it worth it. We will see if balancing it with a new workstation CPU will allow Premiere to really take advantage of the extra power it brings to the table. Many video editors will be well served by the mid-level options that are presumably coming soon. But for people doing 3D processing that stretches their current graphics solutions to their limits, upgrading to new Ampere GPUs will make a dramatic difference in how fast you can work.
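
For anyone who wants to check the step-up percentages quoted above, here is a minimal Python sketch. It uses the launch prices, CUDA core counts, and memory sizes listed earlier in this article, with NVidia’s published figure of 10,496 cores for the 3090. The `CARDS` table and the `step_up` helper are names made up for this illustration, and core counts are only a rough proxy for real-world performance.

```python
# Launch price (USD), CUDA cores, and memory (GB) for each card.
# The 3090 core count is NVidia's published figure of 10,496.
CARDS = {
    "RTX 3070": {"price": 500, "cores": 5888, "memory_gb": 8},
    "RTX 3080": {"price": 700, "cores": 8704, "memory_gb": 10},
    "RTX 3090": {"price": 1500, "cores": 10496, "memory_gb": 24},
}


def step_up(lower, higher):
    """Percentage increase in each spec when moving from lower to higher."""
    a, b = CARDS[lower], CARDS[higher]
    return {key: round((b[key] / a[key] - 1) * 100, 1) for key in a}


for lower, higher in [("RTX 3070", "RTX 3080"), ("RTX 3080", "RTX 3090")]:
    print(f"{lower} -> {higher}: {step_up(lower, higher)}")
```

Run this way, the 3080 comes out at 40% more money for roughly 48% more cores and 25% more memory, while the 3090 costs about 114% more than the 3080 for 140% more memory and roughly 21% more cores.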