This is the first in a series of articles where we are going to explore computer networks, and how to get the most out of them in the post production environment. Networking is very IT centric, and not the most exciting topic in the world of media creation, but it has a huge impact on our workflow possibilities, and how we collaborate with others. There are many different networking technologies out there, and the aim of this series is to help readers identify which ones are most relevant to their situation, and better understand how to deploy and use them, to improve how they do their work.

Why Networking?

Large projects require collaboration between multiple people who need to be able to communicate. Large digital projects require collaboration between computer systems that can communicate. Computers communicate over a network, and this communication is leveraged in a number of different forms. File servers allow multiple editing workstations to reference the same footage without each system needing to have a separate local copy, saving storage space. Because they all reference the same copy, any changes made to that source file will be reflected on all the systems, aiding in collaboration. Processing tasks can be distributed to other systems, allowing operators to continue working on their own systems unhindered during renders and exports. Work can be shared on the network for feedback and approvals. And now with IP Video and NDI, the network is even being used to send the output picture to the displays or recorder while playing back. So there are lots of ways that editors and post production professionals can use networking to make their tasks easier and more efficient. Higher network bandwidths open up more workflow options, and speed up transfer tasks. And the more you know about networks, the more effectively you can deploy and utilize them.

Ethernet

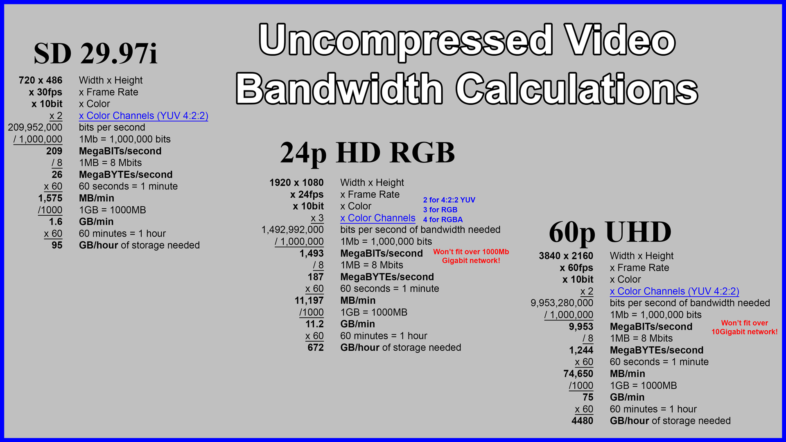

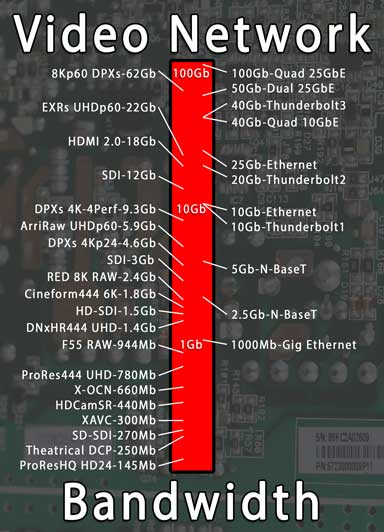

Ethernet has been the dominant form of computer networking for nearly forty years. It has evolved significantly since its original introduction, starting with coaxial cable connections, soon replaced by twisted pair copper wire, and then moving to fiber optics for longer distances and higher bandwidths. Data transmission rates have increased by many orders of magnitude as well, from 10 Megabits (million bits) per second to 100 Gigabits (billion bits) per second. As available bandwidth has increased, new collaboration possibilities have become available. Everything from email to teleconferencing depends on our Ethernet networks. But video media is really what has the highest bandwidth demands, so video editors and other content creators are the ones who have the most to gain from advances in networking speeds. Video can be stored and transmitted in many different forms. A low resolution video call might require less than 1 Megabit per second, while uncompressed 8K content requires 24 Gigabits (24,000 Megabits) per second. With this level of possible variation, how does one know how much bandwidth they should expect to need? Uncompressed video data rates are directly tied to resolution and frame rate, and are fairly easy to calculate: multiply the frame width and height by the frame rate, the bit depth, and the number of samples per pixel (3 for 4:4:4, 2 for 4:2:2).

If we apply the same approach to 1080p60 at 10-bit 4:2:2, we see that 1920x1080x60x10x2 is roughly 2.5 billion, so a 2.5 Gigabit data rate. This won’t play back over a gigabit network, but does fit in a 3G-SDI stream. So we can see why using networks to move around uncompressed video data CAN require a lot of bandwidth.
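The arithmetic above can be sketched in a few lines of Python. This is only an illustration: the function name and the lookup table are made up for this example, and the 8K parameters (24 frames per second, 10-bit 4:4:4) are an assumption chosen because they land close to the 24 Gigabit figure mentioned earlier, not something stated in this article.

```python
# Average samples per pixel for common chroma subsampling schemes.
SAMPLES_PER_PIXEL = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}


def uncompressed_bits_per_second(width, height, fps, bit_depth, subsampling):
    """Return the uncompressed video data rate in bits per second."""
    return width * height * fps * bit_depth * SAMPLES_PER_PIXEL[subsampling]


# 1080p60 at 10-bit 4:2:2, the example from the text.
hd = uncompressed_bits_per_second(1920, 1080, 60, 10, "4:2:2")

# 8K example; frame rate and sampling are assumptions for illustration.
uhd8k = uncompressed_bits_per_second(7680, 4320, 24, 10, "4:4:4")

print(f"1080p60 10-bit 4:2:2: {hd / 1e9:.2f} Gb/s")
print(f"8K 24p 10-bit 4:4:4:  {uhd8k / 1e9:.2f} Gb/s")
```

These figures cover picture data only; audio, blanking, and any network protocol overhead would add to them.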

Video Compression

Fortunately, there is another option, in the form of video compression. By processing the video data on both ends, it is possible to decrease how much bandwidth is required to move or store it. There are many different types of compression, designed for varying needs and use cases, usually referred to as codecs (enCOde/DECode). Some codecs compress the data more, at the expense of processing time and image quality, while others compress it less, using more bandwidth and storage to preserve quality. Some codec bandwidths are dependent on resolution and frame rate, while others are totally independent of those variables. A 10Mb H.265 stream has the same bandwidth requirements, regardless of whether the content is HD, 4K, or even 8K. Other codecs, like ProRes or DNxHR, change their bit-rate depending on the video they are encoding. Fortunately most post-production workflows involve some level of video compression these days, usually due to storage requirements more than bandwidth concerns, but it works in our favor. Camera recording formats range from 24Mb AVCHD, to 100Mb AVC-Intra, to 300Mb XAVC, to 700Mb Sony Venice OCN, to 1500Mb R3D files. Editing rarely involves more than 3-4 streams of footage, so if we multiply the codec's bit-rate by 5, we should have a safe margin for heavy editing. Simple edits only use 2 streams during a transition, so they might get away with half that bandwidth. Dedicated VFX work is frequently done on uncompressed data, but usually doesn’t require real time playback beyond what can be cached on the local system, since it is being processed before display. But anytime we are rendering, greater than real-time bandwidth is still advantageous, if the system can keep up with the data being delivered.
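As a rough illustration of that rule of thumb, the sketch below multiplies each of the camera bit-rates quoted above by 5 for heavy editing, halves that for simple edits, and compares the result against a link speed. The names are hypothetical, and the comparison uses the nominal link rate, so it ignores protocol overhead and real-world storage limits.

```python
# Camera bit-rates quoted in the text, in megabits per second.
CAMERA_MBPS = {
    "AVCHD": 24,
    "AVC-Intra": 100,
    "XAVC": 300,
    "Sony Venice OCN": 700,
    "R3D": 1500,
}


def editing_bandwidth_mbps(codec_mbps, heavy=True):
    """Estimate bandwidth for editing: 5x the stream rate, or half that for simple edits."""
    heavy_estimate = codec_mbps * 5
    return heavy_estimate if heavy else heavy_estimate / 2


def fits_on_link(needed_mbps, link_mbps):
    """True if the estimate is within the link's nominal rate (overhead ignored)."""
    return needed_mbps <= link_mbps


GIGABIT = 1000  # nominal Gigabit Ethernet rate in Mb/s

for name, mbps in CAMERA_MBPS.items():
    heavy = editing_bandwidth_mbps(mbps)
    simple = editing_bandwidth_mbps(mbps, heavy=False)
    print(f"{name}: heavy {heavy} Mb/s (fits 1GbE: {fits_on_link(heavy, GIGABIT)}), "
          f"simple {simple} Mb/s (fits 1GbE: {fits_on_link(simple, GIGABIT)})")
```

By this estimate, 300Mb XAVC needs about 1500Mb for heavy editing, which already exceeds a gigabit link, while a simple edit at about 750Mb would still fit.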

Some codecs, usually wavelet based, can be decoded at lower resolutions without needing the entire data stream. In these cases, playing at lower resolutions will greatly reduce your bandwidth needs. Other codecs need to decode the entire frame before it can be scaled to a lower resolution for easier processing. Most codecs optimized for editing support fractional decode, while delivery and streaming codecs (H.264, HEVC) do not. But those streaming codecs usually have low enough bit-rates already that network bandwidth is not the limiting factor. For high bandwidth uncompressed streams, the first concern is storage performance. Until SSDs came into widespread use, generating enough data to exceed the capacity of gigabit networks required an array of hard disks working together as a RAID, and these high value items were usually shared between machines for the most efficient aggregate performance. Now a small laptop SSD can easily saturate a 10GbE connection, so the world in which your network exists has changed dramatically in recent years. In many cases, network bandwidth is now the primary factor limiting performance.

Gigabit Ethernet

I am assuming that anyone reading this who works with video over a network is already using at least 1 Gigabit networking gear, so there is no reason to discuss anything below that level of performance. Gigabit Ethernet has been the most popular way to network computers for over 15 years, and allows data transfers up to 125MB/second. Most gigabit networks run on twisted-pair copper cables, rated as Cat5e, Cat6, or Cat6A, with plastic RJ45 heads. This is referred to as 1000Base-T (1000Mb over twisted pair).
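The 125MB/second figure comes from dividing the 1000 Megabit line rate by 8 bits per byte. A small sketch of that conversion, and of what it means for copy times, is below. The function names and the 100GB file size are hypothetical, decimal units are assumed (1GB = 1000MB), and protocol and storage overhead are ignored, so real transfers will be somewhat slower.

```python
def link_megabytes_per_second(link_gigabits):
    """Convert a nominal link rate in Gb/s to a theoretical maximum in MB/s."""
    return link_gigabits * 1000 / 8


def transfer_seconds(file_gigabytes, link_gigabits):
    """Best-case time to move a file of the given size over the link."""
    return file_gigabytes * 1000 / link_megabytes_per_second(link_gigabits)


for gigabits in (1, 10):
    rate = link_megabytes_per_second(gigabits)
    seconds = transfer_seconds(100, gigabits)  # hypothetical 100GB of footage
    print(f"{gigabits}GbE: up to {rate:.0f} MB/s, 100GB in about {seconds / 60:.1f} minutes")
```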

Twisted pair is a great solution for gigabit runs up to 100 meters. Longer range network links, between buildings and locations, run on fiber optic cables. These have a variety of connectors, but the most popular is LC tips inserted into SFP (Small Form-factor Pluggable) transceivers.

10-Gigabit Options

But many people know very little about what is available on the market beyond the ubiquitous Gigabit Ethernet gear. 10 Gigabit Ethernet (10GbE for short) comes in a variety of different forms, making it a bit more complicated than Gigabit Ethernet, which might be a factor that inhibits users from stepping up to that technology. The biggest challenge that 10GbE presents, from a technical standpoint, is that it requires too high a frequency for simple serial transmission over twisted pair wiring. A number of different solutions have been developed in response to this issue, with very different approaches. The first is to use fiber optics instead of wires, which allows higher frequencies. This is a simple solution, but the trade-off with this approach is much higher costs. This has led to a number of copper based solutions. The first was to run a number of parallel lower frequency lines together, usually in the form of CX4. This was cheap, but limited to short runs (under 15′) with thick cumbersome cables. The next was 10GBase-T, which uses lots of signal processing to encode the data and send it over twisted pair wires (Cat6 or above), similar to existing Gigabit Ethernet equipment. This signal processing requires expensive hardware, and burns energy generating heat, but installation and operation are very similar to existing Gigabit Ethernet, making it popular for that reason.

For short runs, cheap copper TwinAx cables can now be used as direct replacements for fiber optic transceivers in SFP+ ports, and these have now replaced CX4 for in-rack connections between servers and switches. Lastly, the most recent approach is to reduce the data rate by 50 or 75% (to 5 or 2.5 Gigabits), to allow use of lower quality existing copper wires (Cat5e), but still at greater than Gigabit speeds. This is called NBASE-T, and has become more popular in the last year or so, bringing greater-than-gigabit speeds to more end users than all of the other technologies combined.

Wi-Fi

Technically there is another widely available alternative to Ethernet, in the form of Wi-Fi. Of course the main difference here is that it is wireless, opening up some interesting options. The maximum data rates of Wi-Fi have increased to ridiculous levels recently, exceeding Gigabit Ethernet, but those maximums are rarely experienced in real world operation. Wi-Fi connections may be viable for users editing H.264 or HEVC compressed bit-streams directly over the network, but they are not going to replace wired Ethernet for users who need reliable access to large files over the network. While there is a story that could be written about testing performance of the newest wireless routers for editing video over a network, that is not where this series is headed. We will instead be focused on network speeds significantly exceeding the standard Gigabit Ethernet, and the workflow possibilities that those technologies open up, for better collaboration and lower costs.