NewTek announced its “Network Device Interface” (NDI) protocol back in 2016 as a way to transfer HD video signals in real time over IP networks. It was designed to replace SDI transmission and routing of high-fidelity video signals, usually within a facility. SDI was used to connect tape decks, computers, and monitors to routers and switches, which would direct and process the signal. The vision was to replace all of that coaxial cabling infrastructure with IP-based Ethernet packets, running on the same networks and cabling that were already needed to support the data networks. NDI is a compressed alternative to the SMPTE 2022 and 2110 standards, which require much more bandwidth for uncompressed video routing over 10GbE. NDI uses its own codec to compress video around 15:1, allowing it to be used over existing Gigabit networks. There is also an “HX” variant of NDI that uses a lower-bitrate, H.264-based level of compression, optimized for higher resolutions and wireless networks.
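To put those bandwidth figures in rough perspective, here is a back-of-the-envelope calculation. The frame size, frame rate, and 10-bit 4:2:2 sampling (20 bits per pixel on average) are my own assumptions for a typical HD signal, not published numbers, and the math ignores audio, blanking, and network overhead.

```python
def video_bitrate_mbps(width, height, fps, bits_per_pixel):
    """Raw active-picture bitrate in megabits per second."""
    return width * height * fps * bits_per_pixel / 1_000_000


# Assumed signal: 1080p at 60 fps, 10-bit 4:2:2 (20 bits per pixel on average)
uncompressed = video_bitrate_mbps(1920, 1080, 60, 20)
compressed = uncompressed / 15  # the roughly 15:1 ratio mentioned above

GIGABIT_MBPS = 1000  # nominal capacity of a Gigabit link

print(f"uncompressed: {uncompressed:.0f} Mbps")
print(f"at 15:1:      {compressed:.0f} Mbps")
print(f"fits on Gigabit uncompressed? {uncompressed < GIGABIT_MBPS}")
print(f"fits on Gigabit at 15:1?      {compressed < GIGABIT_MBPS}")
```

Under those assumptions, a single uncompressed stream needs roughly 2.5 Gbps, while the 15:1 version lands around 166 Mbps, which is why several compressed streams can share one Gigabit link.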

There are now a variety of products, both hardware and software, that use NDI to communicate between different stages of a workflow, usually targeted at live production. But the software toolset that has been developed to enable those workflows offers a number of other interesting options when used slightly outside its intended context. NewTek offers the basic NDI software toolset as a free download on its website, and it includes, among other things:

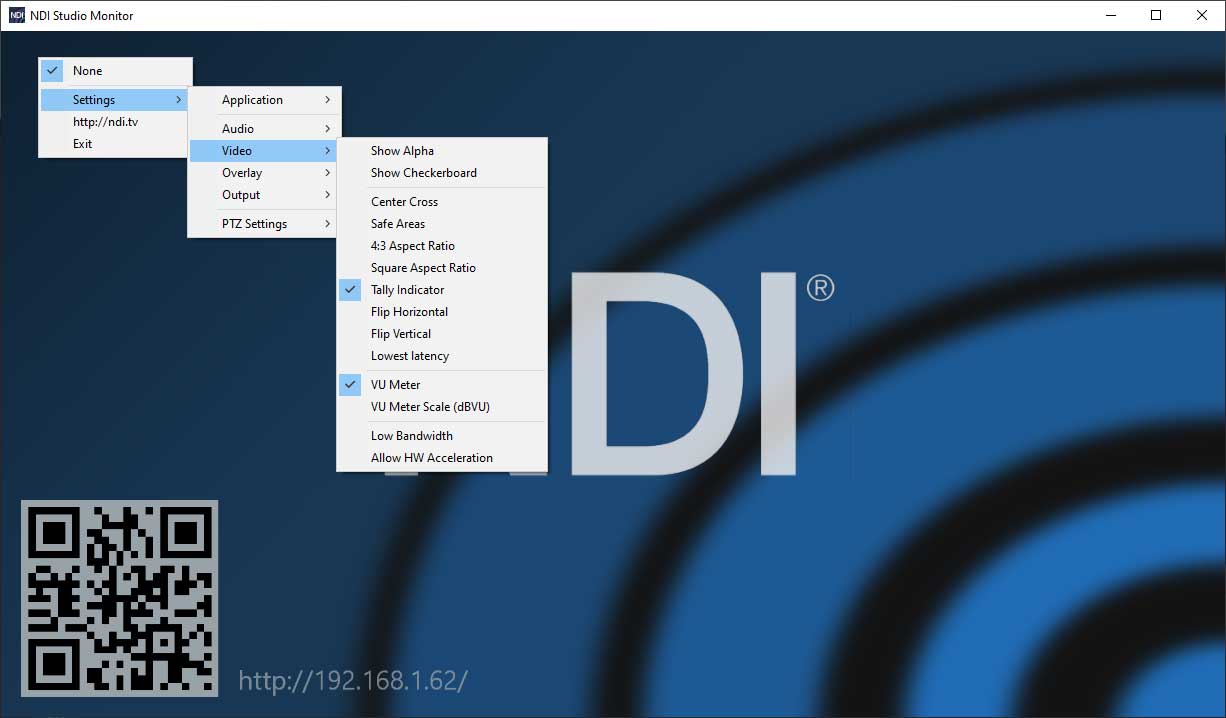

NDI Studio Monitor: allows monitoring and recording of any available NDI stream on the network, and can overlay streams together with other modifications and re-transmit the result to the network.

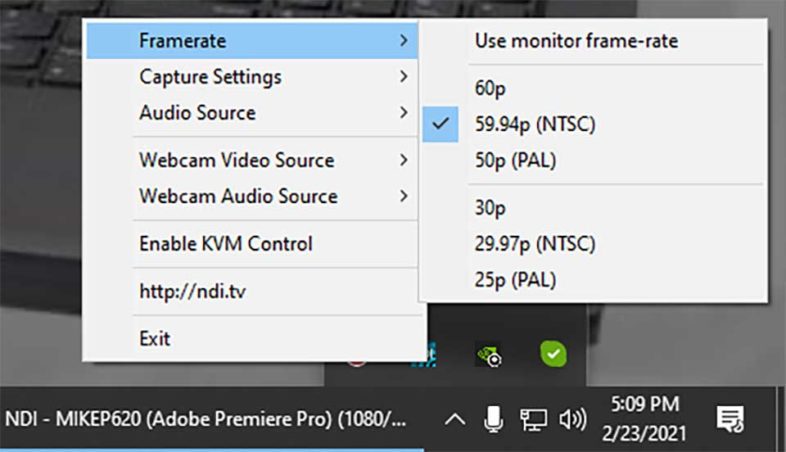

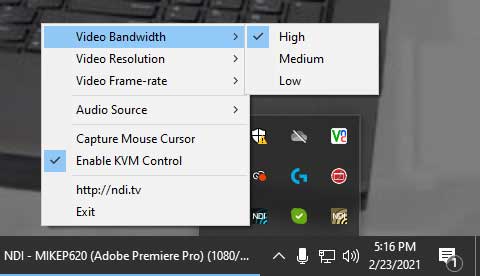

NDI Screen Capture: captures the images from your desktop display(s) and outputs them as NDI channels on the network. Also supports KVM control over that channel on Windows.

NDI Screen Capture HX: captures the images from your desktop display to a more compressed NDI HX stream via GPU-based NVENC for better performance, with a KVM option.

NDI Virtual Input: converts any NDI source into a webcam input on your system, allowing that source to be streamed over video conferencing apps.

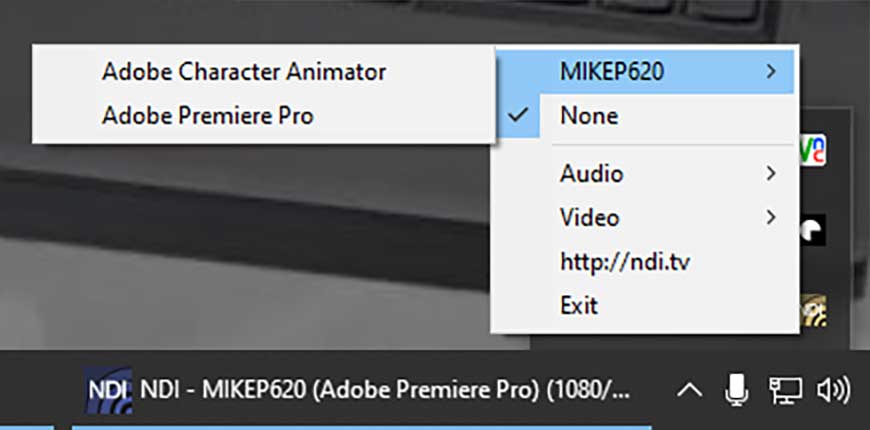

NDI for Adobe Creative Cloud: a plugin that allows application output from Premiere Pro, After Effects, or Character Animator to be transmitted as an NDI stream. Also allows import and editing of files from captured NDI streams.

This software toolset offers a number of interesting workflow possibilities for remote groups, for individuals working between multiple systems, or even on a single system. Basically, NDI sources take video data from an application, compress it into the NDI protocol, and make it available to other resources on the network. NDI receivers or viewers take that data stream from the network, or from another application on the local system, and display it or make it available in another program. NDI Virtual Input is a receiver tool that allows nearly any application that supports webcam input to use a live NDI feed. NDI Screen Capture turns your GPU output into an NDI feed that is available to any NDI receiver. The Creative Cloud plugin turns your Adobe application into an NDI source. Studio Monitor can be both, processing and displaying the NDI feeds that are coming in, and becoming an NDI source of the resulting output.
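The source and receiver roles can be sketched as a toy model. To be clear, this is not the NDI SDK: the `Network` class, the function names, and the use of plain strings as "frames" are all hypothetical, chosen only to show which tools publish feeds, which consume them, and how Studio Monitor does both.

```python
from dataclasses import dataclass, field


@dataclass
class Network:
    """Toy stand-in for the network: maps source names to their latest frame."""
    sources: dict = field(default_factory=dict)

    def publish(self, name, frame):
        self.sources[name] = frame

    def receive(self, name):
        return self.sources[name]


def screen_capture(net, desktop_pixels):
    # Source only: puts the desktop image on the network
    net.publish("Screen Capture", desktop_pixels)


def creative_cloud_plugin(net, timeline_frame):
    # Source only: puts the application's program output on the network
    net.publish("Premiere Pro", timeline_frame)


def virtual_input(net, name):
    # Receiver only: hands a network feed to a webcam-style consumer
    return net.receive(name)


def studio_monitor(net, base, overlay):
    # Both: receives two feeds, combines them, and publishes the result
    combined = f"{net.receive(overlay)} over {net.receive(base)}"
    net.publish("Studio Monitor", combined)
    return combined


net = Network()
screen_capture(net, "desktop")
creative_cloud_plugin(net, "timeline")
studio_monitor(net, base="Screen Capture", overlay="Premiere Pro")
webcam_frame = virtual_input(net, "Studio Monitor")
print(webcam_frame)  # timeline over desktop
```

The point of the sketch is that any receiver can pick up any published feed by name, so a tool that both receives and publishes can be chained in front of another receiver.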

This makes it much easier to generate high-end tutorials and other screen capture content, combined in real time for streaming, instead of editing them together in post. You can easily switch between the application UI and the program output when demonstrating a software function, or, for gaming streamers, use Character Animator to generate an avatar from your webcam input.

There are also applications to utilize NDI on other devices, like HX Capture and HX Camera for iPhone, streaming their screen or camera feed as an NDI source over the WiFi connection, and NDI Monitor TV allowing you to view NDI streams via an Apple TV 4K. One of the key benefits of NDI is the widespread support, allowing otherwise incompatible products to pass images between each other.

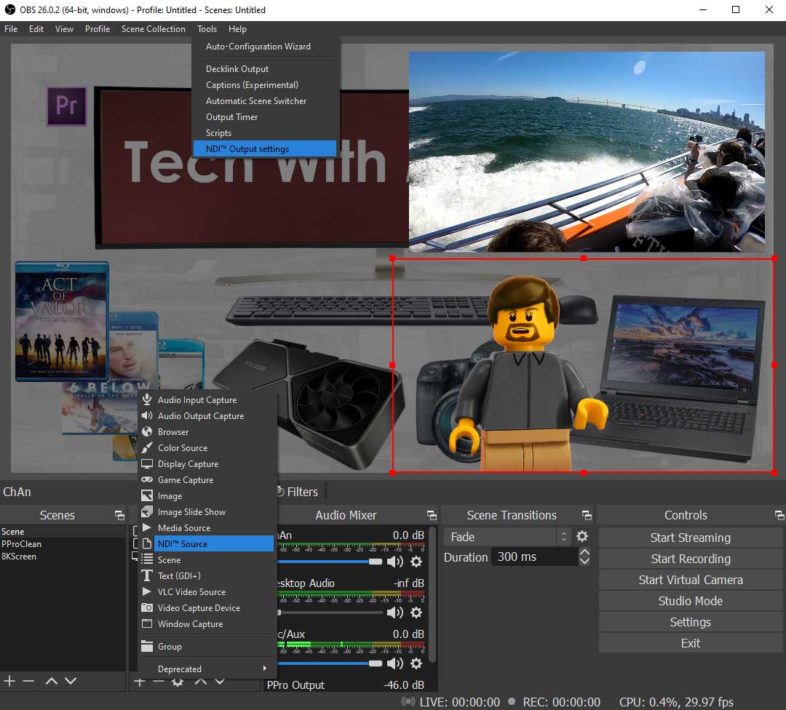

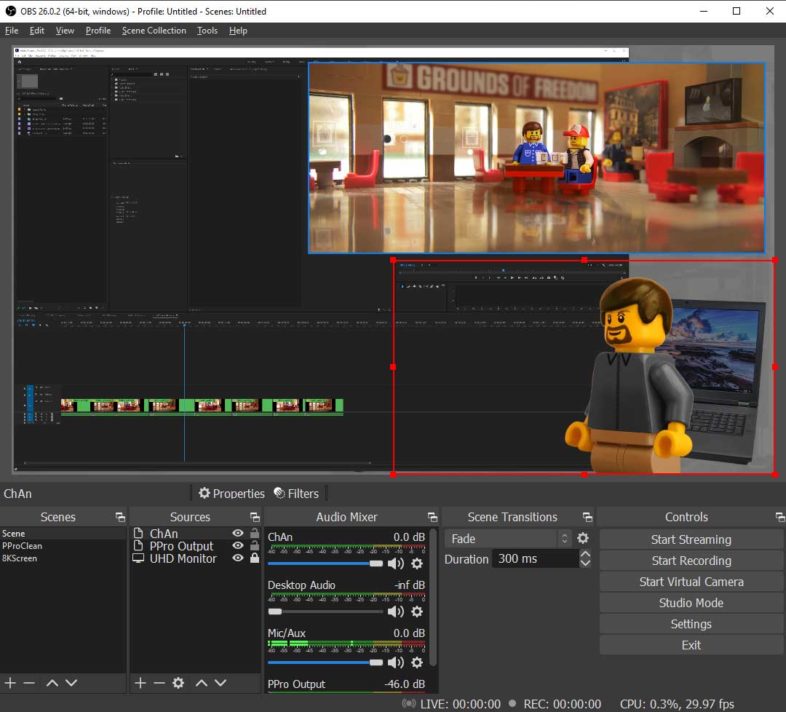

NewTek sells a variety of products that enable and leverage NDI workflows, from their Spark SDI interfaces to their TriCaster production switching solutions. There are also other vendors like BirdDog and Magewell that make their own NDI-based hardware products, and companies like Sienna and vMix that have NDI integrated into their software. It is also supported in applications like OBS, which is what I have been using it with. OBS supports bringing in any NDI feed as a source for your program, and can also output its own NDI feed of the mixed stream.

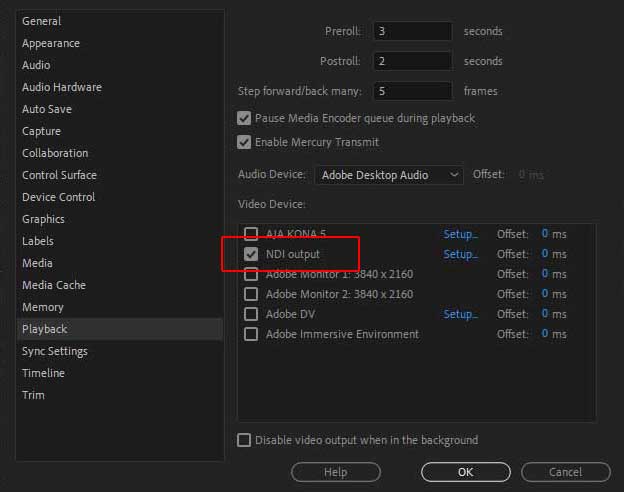

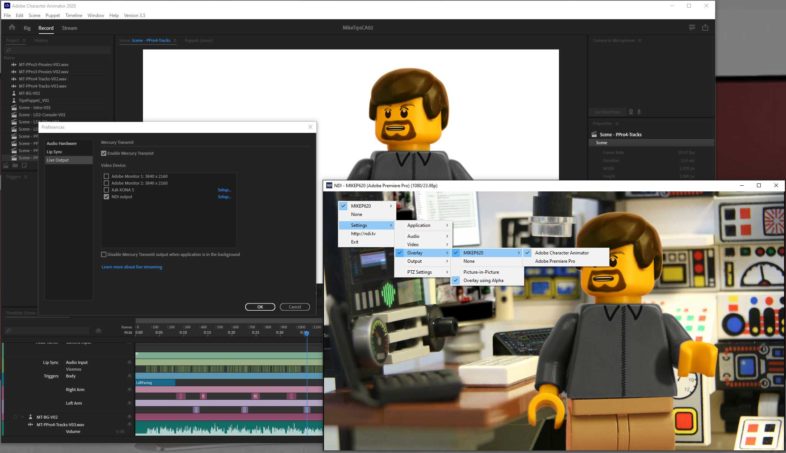

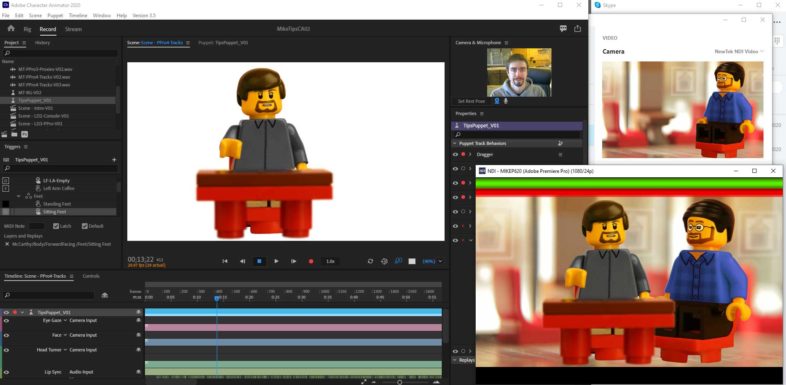

I do very little work in real time, but I used to use SDI to capture NLE output for quick encodes, and could use NDI for real-time encodes of my Character Animator projects. Setting NDI as the output in Mercury Transmit, and recording that stream in NDI Studio Monitor, allows real-time recording of anything you can play back in real time in Premiere Pro, After Effects, or Character Animator, with alpha channel support. It can even overlay the output from one program over another, so I could composite my Lego puppet over a screen capture or Premiere playback output in real time.
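For anyone curious what overlaying a feed with an alpha channel means at the pixel level, here is a minimal sketch of the standard "over" compositing operator. I don't know how Studio Monitor implements its overlay internally, so treat this as an illustration only. It assumes straight (non-premultiplied) alpha with every channel in the range 0.0 to 1.0, and the tiny frames are made up.

```python
def over(top, bottom):
    """Composite one straight-alpha RGBA pixel over another.

    Each pixel is (r, g, b, a) with all channels between 0.0 and 1.0.
    """
    tr, tg, tb, ta = top
    br, bg, bb, ba = bottom
    out_a = ta + ba * (1.0 - ta)
    if out_a == 0.0:
        return (0.0, 0.0, 0.0, 0.0)  # both pixels fully transparent

    def blend(t, b):
        # Weight each layer by its alpha, then normalize by the result alpha
        return (t * ta + b * ba * (1.0 - ta)) / out_a

    return (blend(tr, br), blend(tg, bg), blend(tb, bb), out_a)


def composite(top_frame, bottom_frame):
    """Apply `over` to every pixel of two equally sized frames (lists of rows)."""
    return [
        [over(t, b) for t, b in zip(top_row, bottom_row)]
        for top_row, bottom_row in zip(top_frame, bottom_frame)
    ]


# Hypothetical 1x2 frames: an opaque red puppet pixel next to a transparent
# one, laid over an opaque blue screen capture.
puppet = [[(1.0, 0.0, 0.0, 1.0), (0.0, 0.0, 0.0, 0.0)]]
screen = [[(0.0, 0.0, 1.0, 1.0), (0.0, 0.0, 1.0, 1.0)]]
result = composite(puppet, screen)
print(result)
```

Where the puppet is opaque it replaces the screen capture, and where it is transparent the screen capture shows through, which is exactly why outputting the puppet with no background matters.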

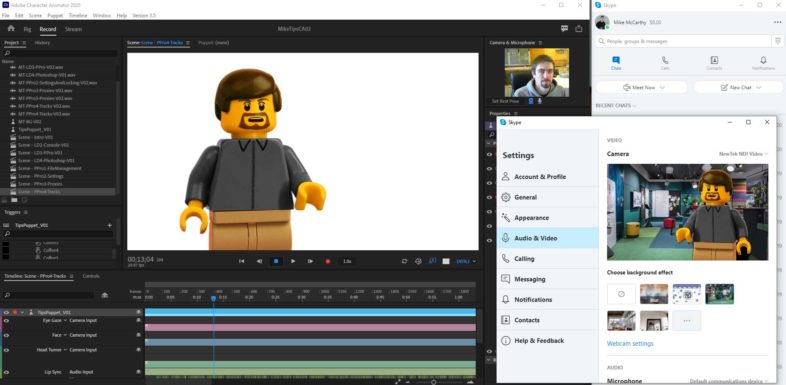

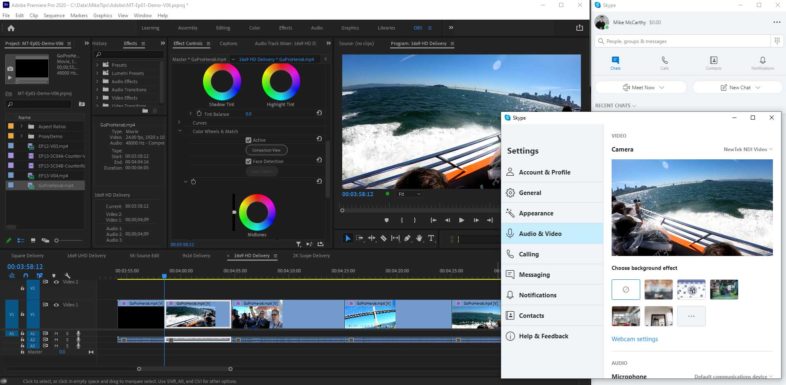

The Virtual Input function is especially interesting to me, given that so many people are working remotely now, which I have been doing for a while. Usually my boss and I, who are thousands of miles apart, just pass projects back and forth that link to duplicate media. I also use VNC over a VPN to operate his system when needed, which offers limited collaboration. But combining NDI output from Premiere Pro with Virtual Input allows me to stream the output from my timeline to my boss over Skype, for instant feedback on changes I make to a clip or sequence. He is seeing a compressed version of the output, but I have easier access to the software UI and better responsiveness than when using VNC.

By combining a number of these functions with OBS, and possibly a second system, I could make some great software tutorials in real time. Screen Capture allows my display output to be sent to a second system on the network. There it can be combined with a Character Animator render of my minifigure avatar and my Premiere or AE output, sent via the NDI plugin for Creative Cloud, so that a live stream can be cut together from those sources. This could theoretically be done on a single system with at least two displays, one for the demoed app and the other to control the switching and streaming, but NDI allows it to be run just as easily from a second system. And I could dedicate a third system to Character Animator if needed, and NDI would allow me to seamlessly link it in, as long as they are all on the same network. Gaming streamers use a similar setup on Twitch, but NDI makes that much easier than it otherwise would be.

An even more interesting application of these utilities would be to combine them across locations. I am looking at producing a live animated podcast of sorts with a co-host who would be remote. He would run his puppet in Character Animator and output it over NDI to Virtual Input, which would be his camera source in Skype or Zoom. This is how you animate yourself for video calls, but we can take it a step further. If I connect to a call with him, I should then be able to screen capture his feed, via Region of Interest, to an NDI feed on my system. Once I have his puppet and background as an NDI feed, I can do the same thing in Character Animator with a puppet of my own, and output it with no background as a separate NDI feed. I can then composite those two sources in Studio Monitor, or in OBS for more flexibility, and stream or record the result. The outcome of all this is that he and I discuss something as if we were on a teleconference call with each other, and an animated video of both of us in the same frame is created live, all through the magic of Character Animator, the internet, and NDI.

So it is easy to see that the flexibility of NDI opens up all sorts of interesting workflow possibilities to explore, in a variety of different applications. I am super excited to see where it leads, as new developments are released and more applications and features are supported.
