The Event Itself

Adobe MAX 2018 took place last week in Los Angeles. The huge conference takes over the LA Convention Center and overflows into the surrounding venues. This was the first year I have attended the event. It started off Monday morning with a two-and-a-half-hour keynote outlining the developments and features arriving in the latest updates to Adobe’s Creative Cloud applications. This was followed by all sorts of smaller sessions and training labs for attendees to dig deeper into the new capabilities of the various tools and applications. The South Hall was filled with booths from various hardware and software partners, with more available than any one person could possibly take in. Tuesday started off with some early morning hands-on labs, followed by a second keynote presentation about creative and career development. I got a front row seat to see five different people successful in their creative fields, including director Ron Howard, discuss their approach to their work and life. The rest of the day was packed with various briefings, meetings and interviews, to the point that I didn’t get to actually attend any of the classroom sessions.

By Wednesday, the event was beginning to wind down, but there was still a plethora of sessions and other options for attendees to split their time between. I presented the workflow for my most recent project, Grounds of Freedom, at NVidia’s booth in the Community Pavilion, and spent the rest of the time connecting with other hardware and software partners who had a presence in the pavilion.

Adobe has released updates for most of its creative applications to coincide with the event. Many of the most relevant updates to the video tools were previously announced at IBC in Amsterdam last month, so I won’t repeat those, but there are still a few new video features, as well as many relevant updates that are broader in scope and apply to media as a whole.

Adobe Premiere Rush

The biggest video-centric announcement is Adobe Premiere Rush, which offers simplified video editing workflows for mobile devices and PCs. Currently releasing on iOS as well as Mac and Windows, with Android to follow in the future, it is a cloud-enabled application, with the option to offload much of the processing from the user’s device. It will also integrate with Team Projects for greater collaboration in larger organizations, and Rush projects can be moved into Premiere Pro for finishing once you are back on the desktop. It is free to start using, but most functionality will be limited to subscription users.

Let’s keep in mind that I am a finishing editor for feature films, so my first question (as a Razr-M user) was: “Who wants to edit video on their phone?” But what if they shot the video on their phone? I don’t do that, but many people do, so I know this will be a valuable tool for them. This has gotten me thinking about my own mentality towards video. I think if I were a sculptor, I would be sculpting stone, while many people are sculpting with clay or silly putty. Because of that, I have trouble sculpting in clay, and would see little value in tools that are only able to sculpt clay. But there is probably benefit to being well versed in both. I would have no trouble showing my son’s first-year video compilation to a prospective employer, because it is just that good, since I don’t make anything less than that. But there was no second-year video, even though I have the footage, because that level of work takes way too much time. So I need to break free from that mentality, and get better at producing content that is “sufficient to tell a story” without being “technically and artistically flawless.” Learning to use Adobe Rush might be a good way for me to take a step in that direction. As a result, we may eventually see more videos in my articles as well. The current ones took me way too long to produce, but Adobe Rush should allow us to create content in a much shorter time frame, if we are willing to compromise a bit on the precision and control offered by Premiere Pro and After Effects.

Rush allows up to four layers of video, with various effects and 32-bit Lumetri color controls, as well as AI-based audio filtering for noise reduction and de-reverb, and lots of preset motion graphics templates for titling and such. It should allow simple videos to be edited relatively easily, with good-looking results, and shared directly to YouTube, Facebook, and other platforms. While it doesn’t fit into my current workflow, I may need to create an entirely new “flow” for my personal videos, and this seems like an interesting place to start, once they release an Android version and I get a new phone.

Photoshop Updates

There is a new version of Photoshop released nearly every year, and most of the time I can’t tell the difference between the new and the old. This year’s differences will probably be a lot more apparent to most users after a few minutes of use. The Undo command now works like it does in other apps, instead of being limited to toggling the last action. Transform operates very differently: they made proportional transform the default behavior instead of requiring users to hold Shift every time they scale, allowed the anchor point to be hidden to prevent people from moving the anchor instead of the image, and removed the “commit changes” step at the end. These are all positive improvements in my opinion, though they might take a bit of getting used to for seasoned pros. There is also a new Frame Tool, which allows you to scale or crop any layer to a defined resolution. Maybe I am the only one, but I frequently find myself creating new documents in PS just so I can drag the new layer, which is preset to the resolution I need, back into my current document. For example, if I need a 200x300px box in the middle of my HD frame, how else do you do that currently? The Frame Tool should fill that hole in the features, giving more precise control over layer and object sizes and positions (as well as providing its intended easily adjustable non-destructive masking).

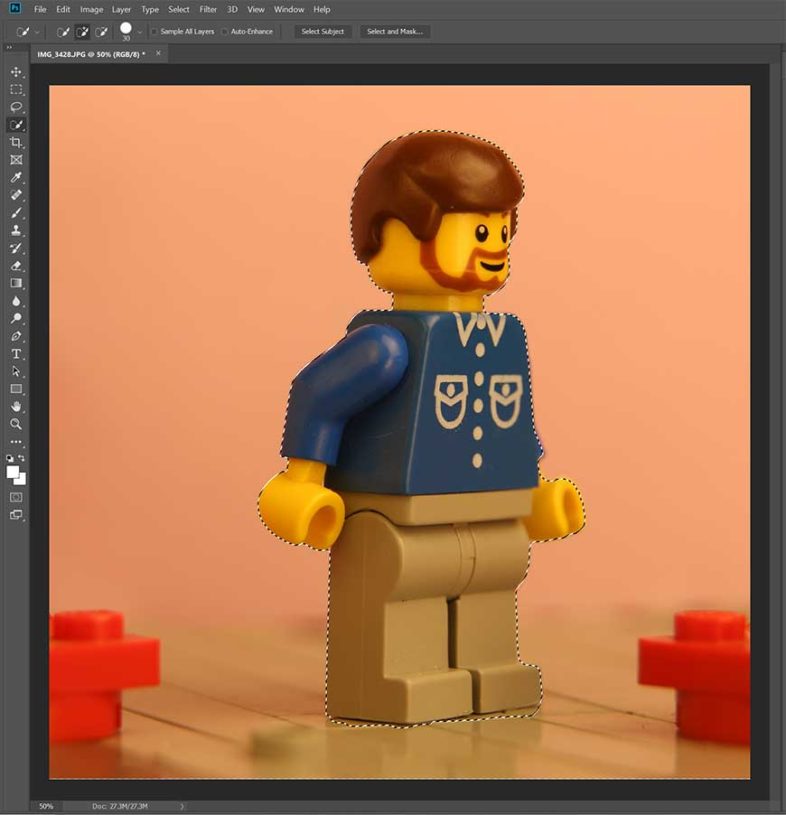

They also showed off a very impressive AI-based auto selection of the subject or background. It creates a standard selection that can be manually modified anywhere that the initial attempt didn’t give you what you were looking for. Being someone who gives software demos, I don’t trust prepared demonstrations, so I wanted to try it for myself, on a real-world asset. I opened up one of my source photos for my animation project, and clicked the “Select Subject” button, with no further input, and got this result. It needs some cleanup at the bottom, and refinement in the newly revamped “Select & Mask” tool, but this is a huge improvement over what I had to do on hundreds of layers earlier this year. They also demonstrated a similar feature they are working on for video footage in Tuesday night’s Sneak previews. Named “Project Fast Mask,” it automatically propagates masks of moving objects through video frames, and while not released yet, looks promising. Combined with the content-aware background fill for video that Jason Levine demonstrated in AE during the opening keynote, basic VFX work is going to get a lot easier.

There are also some smaller changes to the UI, allowing math expressions in the numerical value fields, and making it easier to differentiate similarly named layers, by showing the beginning and end of the name if it gets abbreviated. They also added a function to distribute layers spatially based on the space between them, which accounts for their varying sizes, compared to the current solution which just evenly distributes based on their reference anchor point.
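To make the difference between the two distribution behaviors concrete, here is a minimal Python sketch along one axis. It is only an illustration, not Adobe's implementation: the `Layer` type and both function names are hypothetical, and I am assuming the "reference anchor point" is each layer's center.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    x: float      # left edge, in pixels (hypothetical model of a layer)
    width: float  # layer width, in pixels


def distribute_by_centers(layers):
    """Older behavior (assumed): space the center points evenly."""
    if len(layers) < 2:
        return list(layers)
    ordered = sorted(layers, key=lambda l: l.x + l.width / 2)
    first_c = ordered[0].x + ordered[0].width / 2
    last_c = ordered[-1].x + ordered[-1].width / 2
    step = (last_c - first_c) / (len(ordered) - 1)
    return [
        Layer(l.name, first_c + i * step - l.width / 2, l.width)
        for i, l in enumerate(ordered)
    ]


def distribute_by_spacing(layers):
    """Newer behavior (assumed): make the gaps between layers equal."""
    if len(layers) < 2:
        return list(layers)
    ordered = sorted(layers, key=lambda l: l.x)
    span = (ordered[-1].x + ordered[-1].width) - ordered[0].x
    gap = (span - sum(l.width for l in ordered)) / (len(ordered) - 1)
    result = []
    cursor = ordered[0].x
    for l in ordered:
        result.append(Layer(l.name, cursor, l.width))
        cursor += l.width + gap
    return result


layers = [Layer("A", 0, 200), Layer("B", 250, 20), Layer("C", 450, 50)]
by_centers = distribute_by_centers(layers)
by_spacing = distribute_by_spacing(layers)
```

With layers of very different widths, the center-based version leaves visibly uneven gaps on either side of the middle layer, while the spacing-based version keeps the outer layers in place and equalizes the empty space between neighbors.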

In other news, Photoshop is coming to iPad, and while that doesn’t affect me personally, I can see how this could be a big deal for some people. Adobe has offered various trimmed-down Photoshop editing applications for iOS in the past, but this new release is supposed to be based on the same underlying code as the desktop version, and will eventually replicate all functionality, once they finish adapting the UI for touchscreens.

New Apps

Adobe also showed off Project Gemini, which is a sketch and painting tool for iPad that sits somewhere between Photoshop and Illustrator (hence the name, I assume). This doesn’t have much direct application to video workflows, except that it can record time-lapses of a sketch, which should make it easier to make those “whiteboard illustration” videos that are becoming more popular.

Project Aero is a tool for creating AR (or Augmented Reality) experiences, and I can envision Premiere and After Effects being critical pieces in the puzzle for creating the visual assets that Aero will be placing into the augmented reality space. This one is the hardest one for me to fully conceptualize, as I know Adobe is creating a lot of supporting infrastructure behind the scenes, to enable the delivery of AR content in the future, but I haven’t yet been able to wrap my mind around a vision of what that future will be like. VR I get, but AR is more complicated because of its interface with the real world, and due to the variety of forms in which it can be experienced by users. Similar to how web design is complicated by the need to support people on various browsers and cell phones, AR needs to support a variety of use cases and delivery platforms. But Adobe is working on the tools to make that a reality, and Project Aero is the first public step in that larger process.

Community Pavilion

Adobe’s partner companies in the Community Pavilion were showing off a number of new products. Dell has a new 49″ IPS monitor, the U4919DW, which is basically the resolution and desktop space of two 27″ QHD displays without the seam (5120×1440 to be exact). HP was showing off their recently released ZBook Studio x360 convertible laptop workstation, which I will be posting a review of soon, as well as their ZBook x2 tablet, and the rest of their Z workstations. NVidia was showing off their new Turing-based cards with 8K Red decoding acceleration, ray tracing in Adobe Dimension, and other GPU-accelerated tasks. AMD was showing off 4K Red playback on a MacBook Pro with an eGPU solution, and CPU-based ray tracing on their Ryzen systems. The other booths ran the gamut from GoPro cameras and server storage devices to paper stock products for designers. I even won a Thunderbolt 3 docking station at Intel’s booth (although in the next drawing they gave away a brand new Dell Precision 5530 2-in-1 convertible laptop workstation). Microsoft also garnered quite a bit of attention when they gave away 30 MS Surface tablets near the end of the show. There was lots to see and learn everywhere I looked.

The Significance of MAX

Adobe MAX is quite a significant event, and I have now been in the industry long enough to start to see the evolution of certain trends, as things are not as static as we come to expect. I have attended NAB for the last 12 years, and the focus of that show has shifted significantly away from my primary professional focus (no Red, NVidia, or Apple booths, among many other changes). This was the first year that I had the thought “I should have gone to Sundance,” and a number of other people I know had the same impression, that it had suddenly become more significant for our profession. Adobe MAX is similar, although I have been a little slower to catch on to that change. It has been happening for over ten years, but has grown dramatically in size and significance recently. If I still lived in LA, I probably would have started attending sooner, but it was hardly on my radar until three weeks ago. Now that I have seen it in person, I probably won’t miss it in the future.