This topic has come up a lot recently, so it is time for a post dedicated to the basics of data storage for video projects. Storage here means keeping all of the files for a particular project or workflow. They may not all be stored in the same place, because different types of data have different requirements, and different storage solutions have different strengths and features. This is an exploration of those differences, and how they can be applied to various project storage needs.

At a fundamental level, most digital data is stored on HDDs or SSDs. HDDs, or hard disk drives, are mechanical devices that store the data on a spinning magnetic surface, and move read/write heads over that surface to access the data. They currently max out around 200MB/s and 5ms latency. SSD stands for solid state drive, and involves no moving parts. SSDs can be built with a number of different architectures and interfaces, but most are based on the same basic flash memory technology as the CF or SD card in your camera. Some SSDs are SATA drives that use the same interface and form factor as a spinning disk, for easy replacement in existing HDD compatible devices. These devices are limited to SATA’s bandwidth of 600MB/s. Other SSDs use the PCIe interface, either in full sized PCIe cards, or the smaller M.2 form factor. These have much higher potential bandwidths, up to 3000MB/s. Currently HDDs are much cheaper for storing large quantities of data, but require some level of redundancy for security. SSDs are also capable of failure, but it is a much rarer occurrence. Data recovery for either is very expensive. SSDs are usually cheaper for achieving high bandwidth, unless large capacities are also needed.

RAIDs

Traditionally hard drives used in professional contexts are grouped together for higher speeds and better data security. These are called RAIDs, which stands for Redundant Array of Independent Disks. There are a variety of different approaches to RAID design, which are very different from one another.

RAID-0 or “striping” is technically not redundant, but every file is split across each disk, so each disk only has to retrieve its portion of a requested file. Since these happen in parallel, the result is usually faster than if a single disk had read the entire file, especially for larger files. But if one disk fails, every one of your files will be missing a part of it, making them all pretty useless. The more disks in the array, the higher the chances of one failing, so I rarely see striped arrays composed of more than 4 disks. It used to be popular to create striped arrays for high speed access to restorable data, like backed up source footage, or temp files, but now a single PCIe SSD is far faster, cheaper, smaller, and more efficient in most cases.

RAID-1 or mirroring is when all of the data is written to more than one drive. This limits the array’s capacity to the size of the smallest source volume, but the data is very secure. There is no speed benefit to writes since each drive must write all of the data, but reads can be distributed across the identical drives with similar performance as RAID-0.

RAID-4, 5, & 6 try to achieve a balance between those benefits, for larger arrays with more disks (minimum 3). They all require more complicated controllers, so they are more expensive to reach the same levels of performance. RAID-4 stripes data across all but one drive, then calculates parity (odd/even) data across the data drives and stores it on the last drive. This allows the data from any single failed drive to be restored, based on the parity data. RAID-5 is similar, but the parity volume is alternated depending on the block, allowing the reads to be shared across all disks, not just the “data drives.” So the capacity of a RAID-4 or 5 array will be the minimum individual disk capacity, times the number of disks minus one. RAID-6 is similar, but stores two drives’ worth of parity data, which via some more advanced math than odd/even, allows it to restore the data even if two drives fail at the same time. RAID-6 capacity will be the minimum individual disk capacity, times the number of disks minus two, and is usually only used on arrays with many (>8) disks. RAID-5 is the most popular option for most media storage arrays, although RAID-6 becomes more popular as the value of the data stored increases and the price of extra drives decreases over time.
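
To make the odd/even parity idea concrete, here is a minimal Python sketch of how a single lost block can be rebuilt. It assumes parity is computed as a byte-wise XOR, which is the bitwise form of odd/even parity; the three "drives" and their 4-byte blocks are made-up illustration data, not how any particular controller lays out its stripes.

```python
from functools import reduce

def xor_blocks(blocks):
    """XOR equal-length byte blocks together, one byte position at a time."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

# Three hypothetical data drives, each holding one 4-byte block of a stripe.
data_blocks = [b"\x10\x20\x30\x40", b"\x0f\x0e\x0d\x0c", b"\xaa\xbb\xcc\xdd"]

# The parity block is the XOR of all the data blocks in the stripe.
parity = xor_blocks(data_blocks)

# Simulate losing the second drive, then rebuild its block from the
# surviving data blocks plus the parity block.
survivors = [data_blocks[0], data_blocks[2], parity]
rebuilt = xor_blocks(survivors)

print(rebuilt == data_blocks[1])  # True
```

The same XOR that creates the parity block also recovers a missing block, which is why one drive of parity can cover the loss of any one drive, but not two.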

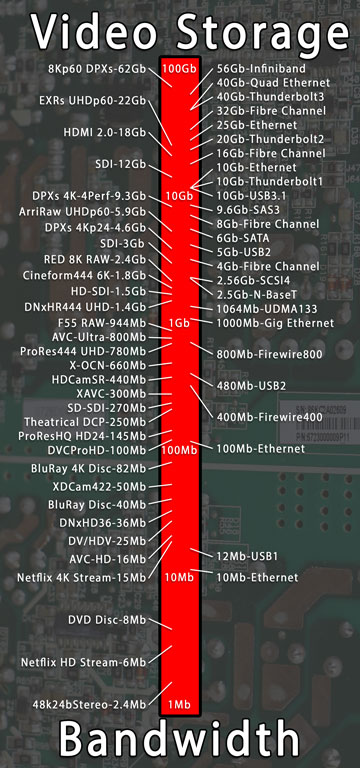

Measuring Data

Digital data is stored as a series of ones and zeros, each of which is a bit. Eight bits make one byte, which frequently represents one letter of text, or one pixel of an image (8-bit single channel). Bits are frequently referenced in large quantities to measure data rates, while bytes are usually referenced when measuring stored data. I prefer to use Bytes for both purposes, but it is important to know the difference. A Megabit (Mb) is one million bits, while a Megabyte (MB) is one million bytes, or 8 million bits. Similar to metric, Kilo is thousand, Mega is million, Giga is billion, and Tera is trillion. Anything beyond that you can learn as you go.

Storage Bandwidth

Networking speeds are measured in bits (Gigabit), but with headers and everything else, it is safer to divide by ten when converting speed into Bytes per second. Estimate 100MB/s for Gigabit, up to 1000MB/s on 10GbE, and around 500MB/s for the new N-BaseT standard. Similarly, when transferring files over a 30Mb internet connection, expect around 3MB/s. Then you multiply by 60 or 3600 to get to minutes or hours. (180MB/min or 10800MB/hr in this case.) So if you have to download a 10Gigabyte file on that connection, come back to check on it in an hour.
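
The divide-by-ten rule is easy to turn into a quick estimator. This is only a rough sketch of the rule of thumb above; the function names are my own, and it assumes 1GB = 1000MB.

```python
def usable_mb_per_s(link_megabits):
    """Rule of thumb: divide the link's bit rate by ten (not eight)
    to leave room for headers and other overhead."""
    return link_megabits / 10

def transfer_minutes(file_gigabytes, link_megabits):
    """Estimated minutes to move a file, assuming 1 GB = 1000 MB."""
    seconds = file_gigabytes * 1000 / usable_mb_per_s(link_megabits)
    return seconds / 60

print(usable_mb_per_s(1000))            # 100.0 (Gigabit Ethernet)
print(usable_mb_per_s(30))              # 3.0 (30Mb internet connection)
print(round(transfer_minutes(10, 30)))  # 56 (10GB file, just under an hour)
```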

Because networking standards are measured in bits, and networking is so important for sharing video files, many video file types are measured in bits as well. An 8Mb H.264 stream is 1MB per second. DNxHD36 is 36Mb/s (or 4.5MB/s when divided by 8), DV and HDV are 25Mb, DVCProHD is 100Mb, etc. Other compression types have variable bit rates depending on the content, but there are still average rates we can make calculations from. Any file’s size divided by its duration will reveal its average data rate. It is important to make sure that your storage has the bandwidth to handle as many streams of video as you need, which will be that average data rate times the number of streams. So ten streams of DNxHD36 will be 360Mb/s, or 45MB/s.
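
Both calculations in that paragraph fit in a few lines of Python. The function names and the 270MB, 60-second sample file are hypothetical, chosen only so the numbers line up with DNxHD36.

```python
def streams_mb_per_s(stream_megabits, stream_count):
    """Storage bandwidth in MB/s needed for several identical streams."""
    total_megabits = stream_megabits * stream_count
    return total_megabits / 8  # 8 bits per byte

def average_megabits(file_megabytes, duration_seconds):
    """Average data rate in Mb/s: file size divided by duration."""
    return file_megabytes * 8 / duration_seconds

print(streams_mb_per_s(36, 10))   # 45.0 (ten streams of DNxHD36)
print(average_megabits(270, 60))  # 36.0 (a 270MB file lasting one minute)
```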

The other issue to account for is IO requests and drive latency. Lots of small requests require not just high total transfer rates, but high IO performance as well. Hard drives can only fulfill around 100 individual requests per second, regardless of how big those requests are. So while a single drive can easily sustain a 45MB/s stream, satisfying 10 different sets of requests may keep it so busy bouncing between the demands that it can’t keep up. You may need a larger array, with a higher number of (potentially) smaller disks to keep up with the IO demands of multiple streams of data. Audio is worse in this regard, in that you are dealing with lots of smaller individual files as your track count increases, even though the data rate is relatively low. SSDs are much better at handling larger numbers of individual requests, usually measured in the thousands or tens of thousands per second per drive.

Storage Capacity

Capacity on the other hand is simpler. Megabytes are usually the smallest increments of data we have to worry about calculating. A media type’s data rate (in MB/sec) times its duration (in seconds) will give you its expected file size. If you are planning to edit a feature film with 100 hours of offline content in DNxHD36, that is 3600×100 seconds, times 4.5MB/s, is 1620000MB, or 1620GB, or simply about 1.6TB. But you should add some head room for unexpected needs, and then a 2TB disk is about 1.8TB when formatted, so it will just barely fit. But it is probably worth sizing up to at least 3TB if you are planning to store your renders and exports on there as well.
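
Here is the same arithmetic as a small Python sketch, in case you want to plug in your own numbers. The function name is my own, and the 1.8TB figure is just the rough formatted size of a 2TB disk mentioned above.

```python
def footage_terabytes(hours, mb_per_s):
    """Expected size in TB: data rate times duration, with 1 TB = 1,000,000 MB."""
    seconds = hours * 3600
    megabytes = seconds * mb_per_s
    return megabytes / 1_000_000

size_tb = footage_terabytes(100, 4.5)  # 100 hours of DNxHD36 at 4.5MB/s
print(size_tb)         # 1.62
print(size_tb <= 1.8)  # True: it fits a formatted 2TB disk, but only barely
```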

Once you have a storage solution of the required capacity, there is still the issue of connecting it to your system. The most expensive options connect through the network to make them easier to share (although more is required for true shared storage), but that isn’t actually the fastest option, nor the cheapest. A large array can be connected over USB3 or Thunderbolt, or via the SATA or SAS protocol directly to an internal controller. There are also options for Fiber Channel, which can allow sharing over a SAN, but this is becoming less popular as 10GbE becomes more affordable. Gigabit Ethernet and USB3 won’t be fast enough to play back high bandwidth files, but 10GbE, multichannel SAS, Fiber Channel, and Thunderbolt can all handle almost anything up to uncompressed 4K. Direct attached storage will always have the highest bandwidth and lowest latency, as it has the fewest steps between the stored files and the user: Host->SAS-RAID-Controller->SAS-Drives. Using Thunderbolt or USB adds another controller and hop, Ethernet even more so.

Different Types of Project Data

Now that we know the options for storage, let’s look at the data we anticipate needing to store. First off we will have lots of video footage of source media. (Either camera original files, trans-coded editing dailies, or both) This is usually in the Terabytes, but the data rates vary dramatically, from 1Mb H.264 files, to 200Mb ProRes files, to 2400Mb Red files. The data rate for the files you are playing back, combined with the number of playback streams you expect to use, will determine the bandwidth you need from your storage system. These files are usually static, in that they don’t get edited or written to in any way after creation. The exceptions would be sidecar files like RMD and XML files, which will require write access to the media volume. If a certain set of files are static, as long as a backup of the source data exists, they don’t need to be backed up on a regular basis, and don’t even necessarily need redundancy. (Although if the cost of restoring that data would be high, in regards to lost time during that process, some level of redundancy is still recommended.)

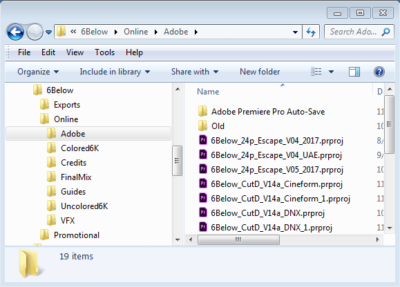

Another important set of files we will have is our project files, which actually record the “work” we do in our application. They contain instructions for manipulating our media files during playback or export. The files are usually relatively small, and are constantly changing as we use them. That means they need to be backed up on a regular basis. The more frequent the backups, the less work you lose when something goes wrong.

We will also have a variety of exports and intermediate renders over the course of the project. Whether they are flattened exports for upload and review, or VFX files, or other renders, these are a more dynamic set of files than our original source footage, and are generated on our systems, instead of being imported from somewhere else. These can usually be regenerated from their source projects if necessary, but the time and effort required usually makes it worth it to invest in protecting or backing them up. In most workflows, these files don’t change once they are created, which makes it easier to back them up if desired.

There will also be a variety of temp files generated by most editing or VFX programs. Some of these files need high speed access for best application performance, but they rarely need to be protected or backed up, because they can be automatically regenerated by the source applications on the fly if needed.

Choosing the Right Storage for Your Needs

So we have source footage, project files, exports, and temp files that we need to find a place for. If you have a system or laptop with a single data volume, the answer is simple: it all goes on the C-Drive. But we can achieve far better performance if we have the storage infrastructure to break those files up onto different devices. Newer laptops frequently have both a small SSD and a larger hard disk. In that case we would want our source footage on the (larger) HDD, while the project files should go on the (safer) SSD. Usually your temp file directories should be located on the SSD as well since it is faster, and your exports can go either place, preferably the SSD if they fit. If we have an external drive of source footage connected, we can back all files up there, but should probably work from projects stored on our local system, playing back media from the external drive.

Now a professional workstation can have a variety of different storage options available. I have a system with two SSDs and two RAIDs, so I store my OS and software on one SSD, my projects and temp files on the other SSD, my source footage on one RAID, and my exports on the other. I also back up my project folder to the exports RAID on a daily basis, since the SSDs have no redundancy.

Individual Storage Solution Case Study Examples

If you have a short film project shot on Red, that you are editing natively, R3Ds can be 300MB/s. That is 1080GB/Hour, so 5 hours of footage will be just over 5TB. It could be stored on a single 6TB external drive, but that won’t give you the bandwidth to play back in real time. (Hard drives usually top out around 200MB/s) Now striping your data across two drives, in one of those larger external drives would probably provide the needed performance, but with that much data you are unlikely to have a backup elsewhere. So data security becomes more of a concern, leading us towards a RAID5 based solution. A 4 disk array of 2TB drives provides 6TB of storage at RAID5. (2TB*[4-1]) This will be more like 5.5TB once it is formatted, but that might be enough. Using an array of 8 1TB drives would provide higher performance, and 7TB of space before formatting (1TB*[8-1]) but will cost more. (8 port RAID controller, 8 bay enclosure, and two 1TB drives are usually more expensive than one 2TB drive.)
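
The sizing logic in this example can be sketched in Python as follows. The function names are my own, and the capacity rule is the simple one from the RAID section (disk size times the number of disks, minus the parity disks), assuming all disks are the same size and ignoring formatting.

```python
def footage_tb(mb_per_s, hours):
    """Footage size in TB for a given data rate and running time."""
    return mb_per_s * 3600 * hours / 1_000_000

def raid_usable_tb(level, disk_count, disk_tb):
    """Usable capacity before formatting, for equal-size disks."""
    parity_disks = {0: 0, 4: 1, 5: 1, 6: 2}
    if level == 1:
        return disk_tb  # a mirror only holds one disk's worth of data
    if level not in parity_disks:
        raise ValueError(f"unsupported RAID level: {level}")
    return disk_tb * (disk_count - parity_disks[level])

print(footage_tb(300, 5))       # 5.4 (5 hours of 300MB/s R3D footage)
print(raid_usable_tb(5, 4, 2))  # 6 (four 2TB drives in RAID5)
print(raid_usable_tb(5, 8, 1))  # 7 (eight 1TB drives in RAID5)
```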

Larger projects deal with much higher numbers. Another project has 200TB of RED footage that needs to be accessible on a single volume. A 24Bay enclosure with 12TB drives provides 288TB of space, minus two drives worth of data for RAID6 redundancy (288TB-[2x12TB]=264TB) which will be more like 240TB available in Windows once it is formatted.
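
Most of the gap between the advertised and "formatted" numbers comes from units: drives are sold in decimal terabytes (10^12 bytes), while Windows reports capacity in binary units (2^40 bytes) and still labels them TB. A minimal sketch of that conversion, ignoring the smaller overhead of the file system itself:

```python
def tb_to_tib(decimal_tb):
    """Convert decimal terabytes (10**12 bytes) to binary tebibytes (2**40 bytes)."""
    return decimal_tb * 10**12 / 2**40

raid6_tb = (24 - 2) * 12  # 24 bays of 12TB drives, minus two drives of parity
print(raid6_tb)                       # 264
print(round(tb_to_tib(raid6_tb), 1))  # 240.1
print(round(tb_to_tib(2), 2))         # 1.82 (a single 2TB disk)
```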

Sharing Storage and Files with Others

As Ethernet networking technology has improved, the benefits of expensive SAN (Storage Area Network) solutions over NAS (Network Attached Storage) solutions have diminished. 10Gigabit Ethernet (10GbE) transfers over 1GB of data a second, and is relatively cheap to implement. NAS has the benefit of a single host system controlling the writes, usually with software included in the OS, preventing data corruption, and also isolating the client devices from the file system, allowing PC, Mac, and Linux devices to all access the same files. This comes at the cost of slightly increased latency, and occasionally lower total bandwidth. But the prices and complexity of installation are far lower. So now all but the largest facilities and most demanding workflows are being deployed with NAS based shared storage solutions. This can be as simple as a main editing system with a large direct attached array sharing its media with an assistant station, over a direct 10GbE link, for about $50. This can be scaled up by adding a switch and connecting more users to it, but the more users sharing the data, the greater the impact on the host system, and the lower the overall performance. Over 3-4 users, it becomes prudent to have a dedicated host system for the storage, for both performance and stability. Once you are buying a dedicated system, there are a variety of other functionalities offered by different vendors to improve performance and collaboration.

Bin Locking and Simultaneous Access

The main step to improve collaboration is to implement what is usually referred to as a “bin locking system.” Even with a top end SAN solution, and strict permissions controls, there is still the possibility of users over-writing each other’s work, or at the very least branching the project into two versions that can’t easily be reconciled. If two people are working on the exact same sequence at the same time, only one of their sets of changes is going to make it to the master copy of the file, without some way of reconciling the differences in those changes. (And solutions are being developed.) But usually the strategy to avoid that is to break projects down into smaller pieces, and make sure that no two people are ever working on the exact same part. This is accomplished by locking the part (or bin) of the project that a user is editing, so that no one else may edit it at the same time. This usually requires some level of server functionality, as it involves changes that are not always happening at the local machine. Avid requires specific support for that from the storage host for it to enable that feature. Adobe on the other hand has implemented a simpler storage based solution, which is effective but not infallible, that works on any shared storage device that offers users write access.

A Note on iSCSI

iSCSI arrays offer some interesting possibilities for read only data, like source footage, as iSCSI gives block level access for maximum performance, and runs on any network without any expensive software. The only limit is that only one system can copy new media to the volume, and there must be a secure way to ensure the remaining systems have read-only access. Projects and exports must be stored elsewhere, but those files require much less capacity and bandwidth than source media. I have not had the opportunity to test out this hybrid SAN theory, as I don’t have iSCSI appliances to test with.

A Note on Top End Ethernet Options

40Gb Ethernet products have been available for a while, and we are now seeing 25Gb and 100Gb Ethernet products as well. 40Gb cards can be gotten quite cheap, and I was tempted to use them for direct connect, hoping to see 4GB/s to share fast SSDs between systems. But 40Gb Ethernet is actually a trunk of four parallel 10Gb links, and each individual connection is limited to 10Gb. It is easy to share the 40Gb of aggregate bandwidth across 10 systems accessing a 40Gb storage host, but very challenging to get more than 10Gb to a single client system. Having extra lanes on the highway doesn’t get you to work any faster if there are no other cars on the road; it only helps when there is lots of competing traffic. 25Gb Ethernet on the other hand will give you access to nearly 3GB/s for single connections, but as that is newer technology, the prices haven’t come down yet. ($500 instead of $50 for a 10GbE direct link.) 100Gb Ethernet is four 25Gb links trunked together, and subject to the same aggregate limitations as 40Gb.

A Note on Backups

Backups are an important part of any storage solution. One option recommended for larger data sets is LTO, which I recently covered in a separate article. Other options are external hard drives (usually USB3) or cloud based solutions, to ensure that you have a separate copy of your most important files if your primary storage solution experiences a failure.