I attended Adobe MAX at the LA Convention Center last week. This is my 6th time at MAX, and it has been interesting to see the event evolve over the years. This year’s primary focus was clearly on AI tools, both generative AI and AI agents. I had used Adobe’s existing Firefly tools to a limited degree over the past year or two but never really dove into them. I intend to do so now that they have matured so much in the most recent release.

Opening Keynote

The main event runs Tuesday to Thursday, opening with the Tuesday morning Keynote in the Peacock Theater in downtown LA, which is broadcast across the internet and carries all of the main announcements. Over the course of the three-hour event, a wide variety of speakers showed off new features in Adobe’s cloud-based tools, as well as in their mobile and desktop applications. I covered much of that news in last week’s article about Adobe’s software updates.

One of the big challenges of generative AI is how to effectively communicate your ideas to the computer, so that what it generates matches the vision in your head that you are trying to bring to life. This is the piece of the puzzle that Adobe has been hard at work on, and they had many new approaches to the problem to show off at the conference. Many new generative AI options are now available on the Firefly platform, both from Adobe and from third-party partners, integrated directly into the workflow. Adobe also has AI agents that make recommendations within its desktop apps, or even perform certain standard tasks for users. Intelligent automatic layer renaming was one of the most applauded features they showed. It isn’t as fancy or buzzy as the generative tools, but there are real users in the audience who do that work by hand every day.

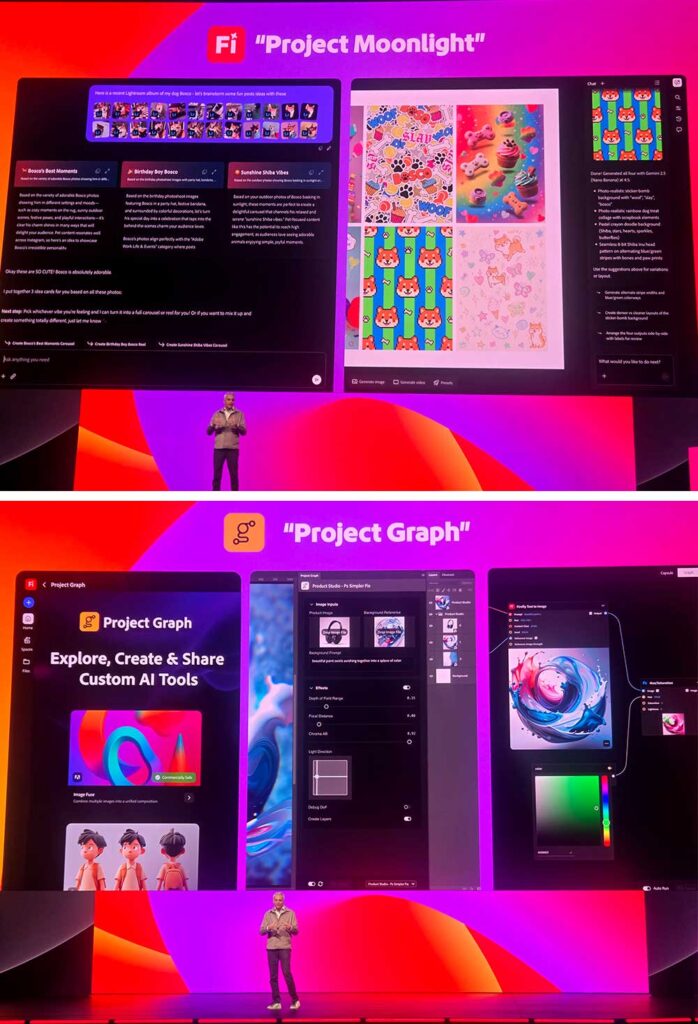

Adobe finished out the keynote with two technology demonstrations that aren’t yet integrated into products, revealing what we should expect next. Project Moonlight offers conversational assistance for creating media and getting it published. It can analyze your social media feeds and offer insights about what is and isn’t working, based on user engagement and other metrics. Project Graph is a node-based workflow building tool that appears to use API access to the processing tools in the various desktop apps. It lets users string together a series of repeatable workflow steps to iterate on content with their own consistent style, or to design prefabricated workflows for large pools of assets. The demo also included references to something called Flywheel, which appears to be a function for converting images into full 3D objects. I am still one step short of really embracing new 3D workflows in tools like After Effects, because I still have trouble generating the 3D assets I am looking for, so Flywheel might be the missing piece in my own workflow puzzle. Only time will tell.

Creativity Park

After the keynote, attendees can go to various sessions and labs to learn from other industry experts. They can also peruse the “Creativity Park,” an exhibition hall with booths for various Adobe products, as well as many third-party partners. Dell and HP were showing off their newest computer systems and displays, and AMD and NVIDIA both had booths highlighting their products and tools. Logitech was showing off the haptic feedback in their newest MX4 mouse, which is supported in Adobe applications, as well as last year’s MX Creative Console. LucidLink announced forthcoming support for directly accessing Frame.io assets as a read-only volume mounted to your desktop. That feature, combined with the existing CameraToCloud functionality, offers some really interesting possibilities for streamlined remote workflows, which I am looking forward to trying out once it becomes available early next year.

Creativity Keynote

Wednesday morning started with the Creativity Keynote, where attendees were back in the Peacock Theater to hear from three popular and successful Adobe users. I had never heard of Brandon Baum, but he has a popular VFX-centric channel on YouTube. His presentation treated tools as puzzle pieces, and framed the storyteller’s job as figuring out how to fit the pieces together to suit the story they are trying to tell. AI is a new puzzle piece, but not a whole new puzzle. He did a live demonstration of how to use Firefly to rapidly make a sequel to one of his recent videos. My main takeaway from that demonstration was that Firefly Boards are far more important than I initially understood, as a way of tracking and sorting your AI-generated assets in the cloud without having to download them locally and upload them again. Either that is new, or I have been doing it wrong. He also had a clever workaround for creating shorter AI transitions, since Firefly video generations are currently locked to 5 seconds. Prompting for a “slow-mo” effect produces footage you can speed up later, either in Premiere or in the new Firefly video editor.

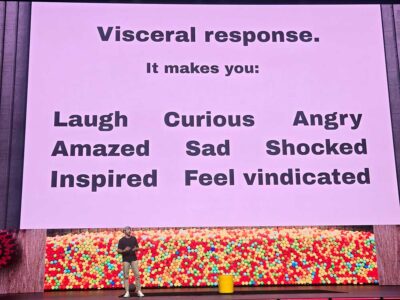

Next up was Mark Rober, whom I was excited to hear from. A year ago I didn’t know who he was, but after he and CrunchLabs were the talk of the dinner table at Thanksgiving with the cousins, I see him everywhere. I signed up for his satellite photo stunt shortly after joining T-Mobile, and have seen a few of his videos. At an event about cloud computing, he opened his presentation by making an actual cloud in the auditorium. Fortunately it didn’t rain on us. Mark talked about embedding educational lessons within his entertaining videos, and the importance of creating a visceral reaction with your content if you want it to be seen and shared. His glitter bombs for porch pirates elicit strong reactions because viewers want to see the thieves get their comeuppance, which satisfies a deep desire for justice. He talked about the importance of stories, and how much people connect with and learn from them. The example he gave, about focusing on a single person in a video about a company in Rwanda, happened to be one of the few of his videos I had actually seen, so I had good context for what he was trying to convey.

The last speaker was writer and director James Gunn, interviewed by Adobe’s Jason Levine. He talked about his early introductions to filmmaking, learning all of the various roles that needed to be filled on his early projects. His advice was not to follow your ‘dreams,’ but to find what you are best at and also enjoy doing. A broad discovery phase is important to that process. His dream was in music, but his actual talent was elsewhere. You need talent, but you also have to be willing to work hard and stick with things until they are finished.

He also talked about how working with the same people repeatedly eliminates the learning curve of ‘figuring them out.’ If you know people, you know what works with them and how to get the best out of them; with new people, you have to learn that before you can really excel together. The Guardians of the Galaxy series is the only work he has directed that I have actually seen, and he did talk about why he chose its music, which stands out in the films even for non-music people like myself. He chose popular songs from the 1970s because they would be familiar to viewers, when nothing else in an alternate-universe outer space movie would be. The audience needed something familiar to connect with the characters, since they have nothing else in common, and the out-of-place songs from the past served that function, which actually made sense to me. His closing recommendations were to live in the moment and not to let fear lead you away from doing what you love.

I had the opportunity to interview Jay LeBoeuf, Adobe’s Head of AI Audio. We discussed the recently released audio tools on Firefly: text-to-speech and soundtracks. He told me about Adobe’s focus on emotion in the generated dialog, and on giving users direct control over it. The new text-to-speech tools on Firefly can use either Adobe’s commercially safe model or ElevenLabs’ popular model with a selection of existing voices. Neither model supports custom voice creation within Firefly yet, but I believe that is coming in some form in the future. Generate Soundtracks seems like the ideal tool for a non-music person like myself, who occasionally needs a soundtrack or background music for a video. Jay explained the great pains Adobe has taken over the last three years, on both the technical and the licensing end, to ensure that music created with the Firefly model can be used on any project without artificial limits.

Sessions and Labs

I also attended a number of sessions and labs. Ben Insler offered an advanced course on Premiere and After Effects workflow integration. I attended more to see what was being taught and how the audience responded than to learn the content myself, because I am pretty familiar with the intersection of those two applications. I did pick up a couple of quick tips. Most of the audience was on board and following along closely, enough to push back on some of his purportedly revolutionary ideas, like not naming your comps and renders. I already knew most of his workflow concepts, but was glad to see them being explicitly taught to a wider audience. A few years back I had wanted to present a session like that, but Adobe wasn’t ready for an advanced Premiere Pro course at MAX, which eventually led me to create my “Pushing the Envelope with Premiere Pro” series of YouTube tutorials instead. It was great to see a session like that finally happening, with users advanced enough to appreciate what was being taught.

I also went to my second presentation from AJ Bleyer, who blew me away last year in Miami with a short film he had created the night before his talk. Maybe my expectations were a little high going into this year’s session, which was a more conventional presentation of the new iterative Firefly gen AI workflow, albeit with AJ’s unique flavor of creativity and ingenuity. I am definitely looking forward to trying out some similar things myself.

Christine Steele’s Cinematic Techniques in Premiere was a very FX-focused lab that used the new object tracker. Eran Stern’s Branded Video Titles and Motion Graphic walked users through a single, very well prepared After Effects project. I have attended sessions from both instructors in the past, and they continue to wrap the newest tools and approaches into the workflows they present.

Adobe Sneaks

Adobe Sneaks was hosted by TV star Jessica Williams, whose reactions to the demos probably intimidated the presenting engineers even more than usual, but there were some interesting developments shown off. It becomes harder and harder to wow the audience in the age of AI, but Adobe’s engineers continue to find a way, and an audience of experienced software users appreciates the subtle details better than the general population would.

Most of the demos were extensions of generative AI removal and replacement tools, taken to the next level. Project Light Touch can relight existing photos. Project TurnStyle is the image version of last year’s vector-based Project TurnTable, which is now a headline feature in Illustrator. Project Trace Erase is Content-Aware Fill on steroids. It removes the object in the user-selected area, but also finds and removes its shadows and reflections outside that area. When removing a light source from a photo, it also relights the scene accordingly, borrowing some functionality from Project Light Touch. Project New Depth is a tool for generatively editing 3D radiance field images. Even just creating radiance fields from a series of photos would be a useful tool that I haven’t seen in a simple form yet. Project Scene It combines image-to-3D with other generative AI tools, but image-to-3D on its own would be useful right now. If I could take a photo of an object and convert it into a 3D object to use in After Effects for previz or post-viz effects, that would be amazingly useful.

There were two audio demos. Project Sound Stager was an AI assistant for sound design that directs the generative sound effects tools already available in Firefly. Project Clean Take was an impressive audio editor that can break an existing recording down into its component sounds, like voice, background, music, and effects. Each of these intelligently separated tracks can then be edited independently with the usual tools, or even generatively reinterpreted, as with AI dialog replacement. It is all the power of Enhance Audio with much more user control.

Project Frame Forward was the most impressive demo in my opinion, and is probably the next step on the path toward prompt-to-edit for video. You take a frame from an existing video and edit it in Photoshop, Firefly, or any other tool. The tool then takes the original video and the modified frame, and attempts to apply the changes it recognizes in the frame to the entire video. This makes sense to me as a logical way to communicate the artist’s intentions to the AI model. The resulting video is not merely a generation based on the edited frame and inspired by the motion in the input video. It appeared to pixel-match the detail of the source video in the areas that hadn’t been changed, while generating imagery where things had been removed and shifting the colors as needed to match the edited frame. Hopefully we see this functionality added to Firefly in the near future.

Some of the things shown at Sneaks are hard to imagine being packaged as final user products, but most of this year’s demos seem very achievable. (Or Adobe has redefined my intuitive sense of what is achievable in the age of AI tools.) I expect many of these functions, or at least parts of them, to be available in Firefly before next year’s conference.

MAX Bash

The MAX Bash is a big party that Adobe throws on the last night of the event, with live musical performances, unique food, and a variety of unusual creative activities to try out. This year it was held in front of and around Crypto.com Arena, in the center of the LA Live complex. I am not one for big loud parties, but the MAX Bash has always had a large variety of things to explore and discover, hidden in a crowd of ten thousand people milling around. This year there was a human claw machine, where users controlled a winch that lowered a person into a pool of prizes to snatch one by hand. But beyond that attraction and its huge line, it was a pretty standard industry party, so not my favorite part of the conference.

Overall it was a good show, and I learned a lot. In one sense I am an engineer attending an art conference, or more accurately an ‘artist’ conference. While many people refer to me as a creative person, I believe ‘innovative’ is usually a more accurate description of my strengths, which are less focused on the aesthetic. But attending MAX always gets me thinking of new things to try with tools I am already pretty familiar with, which I believe is by design. I have a number of ideas to take home and try as I figure out the best ways to leverage AI to improve my own projects and processes. It will be interesting to see what else Adobe develops.