Adobe’s creativity conference MAX took place at the LA Convention Center this week. This is the event that launches the next generation of Creative Cloud applications every year, and ten thousand users from all over converge at MAX to learn about the new tools that are being released. The main event ran Tuesday to Thursday, and the Tuesday morning Keynote presentation, which covered all of the main announcements, was broadcast across the internet.

Unsurprisingly, most of those announcements revolve around AI and its further implementation throughout the Adobe ecosystem. AI is the buzzword across nearly every industry at the moment, but it really can revolutionize media production and creativity. Adobe’s approach to implementing AI has taken a while to come together, but it looks like it may finally be paying off. The two main areas where Adobe has been implementing AI solutions are generative AI and AI agents that provide access to existing tools through conversational experiences.

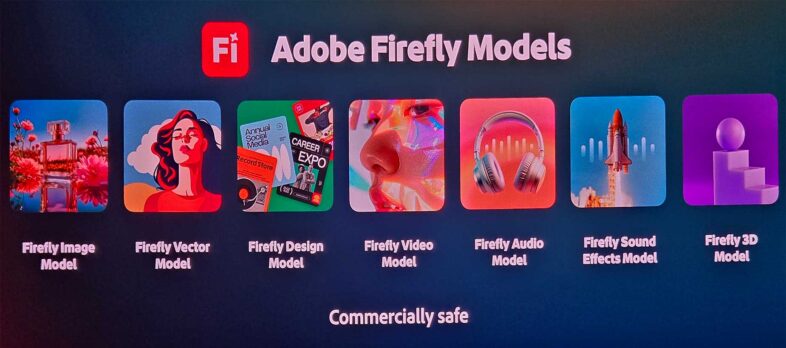

Firefly is the branding that Adobe chose for generative AI a few years ago, replacing their previous Sensei initiative, but the term is now used to label so many things that it can be confusing to keep everything straight. Firefly is the name of their cloud-based generative AI platform, which has now matured into its own media creation tool. But Adobe’s commercially safe generative imaging model, now on its fifth version and available directly within Photoshop and Express, is also called Firefly. And the Firefly platform now supports third-party generative AI models, which are not Firefly. So hopefully we will see something rebranded at some point, to keep things clearer for users.

The Firefly Image Model now has its fifth version in beta, which supports 4-megapixel images and also supports editing existing images, similar to Google’s ‘Nano Banana’ image model. Nano Banana is also now integrated into the Firefly ‘platform’ as part of Adobe’s new partnership with Google. These exist in Firefly alongside other models like ChatGPT, Flux, Runway, Topaz, and Eleven Labs, among others in a growing list. The image models available in Firefly are also directly accessible inside Photoshop, via prompts, content-aware fill, and even (in the case of Topaz Labs) the scaling tools, for AI upres.

The Firefly platform, available in the cloud at firefly.adobe.com, now supports many different types of generative AI (image, video, audio, vector, 3D, etc.), and within those types there are many different models available for users to choose from. Adobe is also adding support for users to create their own customized image models, so their AI-generated assets will match their own existing personal or corporate style. These custom styles are based on 10 to 30 images, so they are very achievable for individual artists to experiment with. On a larger scale, the new Firefly Foundry allows fully customizable models for larger organizations, as an extension of Adobe’s GenStudio product for enterprise customers. Firefly Services and now Firefly Creative Production are tools that offer AI-automated workflows for larger-scale organizations, for creating different variations and formats of existing or new content.

Also on the Firefly platform, there are new generative AI audio tools for creating musical soundtracks designed to match onscreen video, and text-to-speech voiceover models from Adobe and Eleven Labs. These will work similarly to the existing voice-to-sound-effects tools, operating on an existing video asset, which may have been generated in other parts of the platform. This is where we see Adobe finally connecting the dots, allowing their tools to be used as a complete AI media creation solution. The Firefly platform now also supports other media creation functions, allowing users to further refine their generated media and stitch the results into finished pieces for export and delivery. Users can generate an image from scratch, or from a couple of stick figure drawings, use that image as the basis for a video, further refine that video, and add music, sound effects, and a voiceover, all via generative AI tools, with various levels of user input and creative control throughout the process.

One of the big challenges of generative AI is how to effectively communicate your ideas to the computer, so that what it generates matches the vision in your head that you are trying to bring to life. Obviously, crafting long and complex prompts can be a part of that process, but once you have something you like, how do you refine it without starting over, and how do you incorporate existing content that you have but need to alter? This is where Adobe’s toolset appears to be growing in useful and functional ways. Photoshop allows you to seamlessly integrate generative content with existing imagery, or even use AI to composite real images together with proper lighting, shadows, and reflections, via the new Harmonize function.

Adobe Illustrator has numerous performance enhancements, to allow users to manipulate large complex documents with ease. The new ‘Turntable’ function can intelligently rotate flat vector objects, generating the needed depth geometry, as fully editable vectors. There is also a new live preview of complex brush drawing.

Lightroom has added features for AI dust removal and distraction removal. It can now detect reflections in an image, and either remove or enhance them. There is also an AI-assisted image culling feature, which operates on user-defined parameters. It can also stack similar photos, with what it recommends as the best image of a set placed on top of each stack.

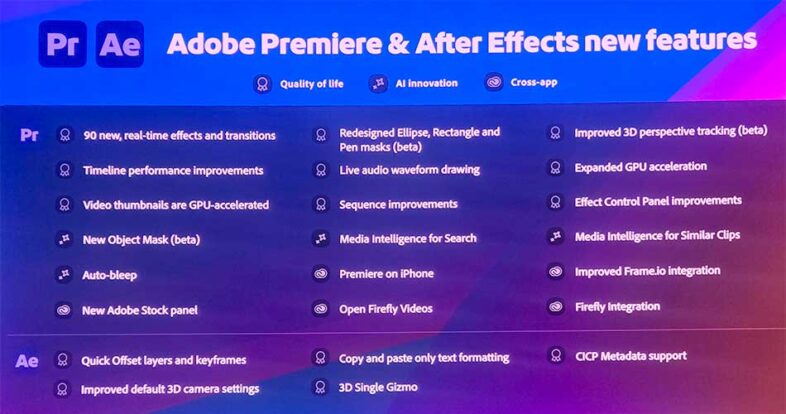

On the video front, the new AI object video masking tools in Premiere Pro allow you to integrate pieces of existing video shots into AI content, or even just into other video shots. And Intelligent Media Search uses AI to search for content in an existing video library, or a batch of fresh footage. There is also a new auto-bleep feature for censoring certain words in both audio and text transcripts.

Also on the video topic, one announcement that I expected to be highlighted far more during the show is Adobe’s recent release of Premiere for Mobile, which is currently available for iPhone and coming to Android. They did demo the functionality of that new app during the Keynote, but there hasn’t been much other reference to it on the show floor. That seemed like the biggest announcement at the show from a “Post Perspective.”

Adobe Express is the lite app version of their creative tools for stills and video, which now integrates with Acrobat and has an AI assistant for conversational control. Express supports detailed templates, which allow skilled designers to create and lock in brand looks, and allow other employees to add content and data, to produce more specific and localized content without further supervision or approval. Adobe Express has also been integrated directly into ChatGPT, and is available as a visual tool directly within the prompt interface. Photoshop has been integrated as well, although the details on how that works were sparse.

Separately from ‘Generative’ AI, Adobe is also introducing their own AI assistants for various applications, to help their users better leverage the existing feature sets in those applications, and allow them to create content in a more natural and conversational way. AI can convert prompts into functions in Photoshop, make recommendations, or even automatically rename all of your hundreds of layers, based on the image content within them. Premiere’s Intelligent Media Search, and Lightroom’s image stacking and culling would fall under this umbrella as well, because nothing is being generated.

Copyright has been an important aspect of the process from Adobe’s perspective, and their models are advertised to be safe for creating commercial content, although that doesn’t extend to the third-party models available on the Firefly platform. It will be interesting to see how that is affected by the new option for customized models on the platform.

All in all, I am looking forward to trying out some of these new options on Firefly myself. I have a couple of different projects in mind that I wouldn’t even attempt if it weren’t for these new capabilities, and I want to see what I can come up with. And I am curious to see what other people are able to come up with on there as well.
